Why GPUs Matter in AI Workloads

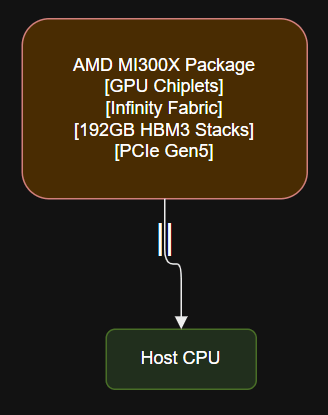

AMD’s Instinct MI300X, announced for production in 2025, is built on the CDNA 3 architecture. It features a chiplet design, combining GPU and HBM stacks on a single package for maximum throughput and memory capacity.

This article reviews the top five latest GPUs of 2025 that are shaping the AI landscape, providing a detailed analysis and side-by-side comparison to help you make informed decisions.

Third-Party Review:

ServeTheHome MI300X Review

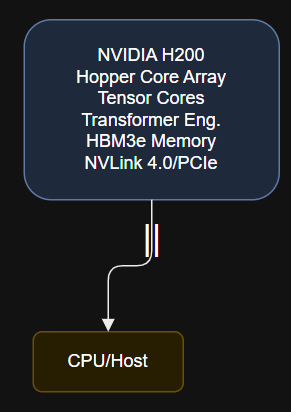

NVIDIA H200

Selecting the right GPU or AI accelerator is pivotal for optimizing the performance, efficiency, and total cost of ownership for AI initiatives. Each of these 2025 models is engineered to address distinct workload challenges, whether you are running multi-modal foundation models, scaling inference, deploying on-premises workstations, or leveraging cloud-native infrastructure. By carefully evaluating your use case, software stack, and scaling requirements, you can harness the full potential of AI innovation in the years ahead.

Architecture Overview

Official Product Page: Intel Gaudi 3 AI Accelerator

Performance

- AI Throughput: Up to 1.2 PFLOPS (FP8), 120 TFLOPS (FP16)

- Memory: 141 GB HBM3e, up to 4.8 TB/s bandwidth

- Power Draw: 700 Watts (typical)

- Key Features: Transformer Engine, 4th-gen NVLink, Multi-Instance GPU (MIG) support

Software Ecosystem

- CUDA 12.x, cuDNN, TensorRT, NCCL, RAPIDS

- Deep integration with major ML/DL frameworks (TensorFlow, PyTorch, JAX)

Real-World Use Cases

- Training and inference for large language models (LLMs)

- GenAI, computer vision, data analytics at scale

- HPC and scientific computing

Pros and Cons

- Exceptional AI performance for both training and inference

- Unmatched memory bandwidth for large models

- High power consumption, significant cooling requirements

Architecture, Data Flow

In the rapidly evolving field of artificial intelligence, the importance of GPUs cannot be overstated. GPUs, or Graphics Processing Units, are designed for parallel processing, making them exceptionally well-suited for the data-intensive and compute-heavy requirements of modern AI workloads. Whether you are training massive language models, deploying computer vision applications, or optimizing inference at scale, the right GPU can dramatically accelerate both development and deployment cycles.

AMD Instinct MI300X

Official Product Page: NVIDIA H200 Tensor Core GPU

Architecture Overview

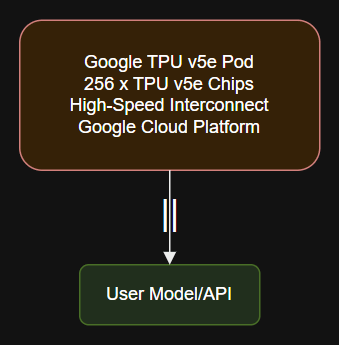

The Google TPU v5e is Google’s most recent cloud-based AI accelerator. It is designed to offer scalable, energy-efficient performance for both training and inference. The v5e generation brings improvements in cost-efficiency and deployment flexibility.

Performance

- AI Throughput: Up to 1.0 PFLOPS (FP8), 180 TFLOPS (FP16)

- Memory: 192 GB HBM3, 5.2 TB/s bandwidth

- Power Draw: 750 Watts

- Key Features: Advanced Infinity Fabric, multi-GPU scaling

Software Ecosystem

- ROCm 6.x, HIP, PyTorch and TensorFlow optimized

- Strong support for open-source AI and HPC frameworks

Real-World Use Cases

- Multi-modal LLMs, foundation model training

- Large-scale inference, scientific simulations

- Cloud and on-premises data centers

Pros and Cons

- Market-leading memory capacity, ideal for extremely large models

- Robust open-source software stack

- Slightly lower single-GPU throughput than NVIDIA H200

Chiplet Design

Official Product Page: AMD Instinct MI300 Series

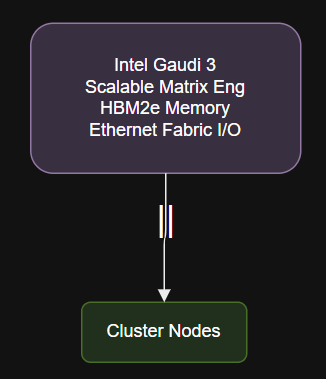

Intel Gaudi 3

AI workloads are not homogeneous. Deep learning training, for example, requires immense memory bandwidth and computational throughput, while inference workloads demand efficiency and low latency. Similarly, edge AI focuses on power efficiency, and data analytics workloads benefit from high memory capacity and scalable architectures. As new models and frameworks emerge, GPU vendors have introduced innovative architectures to address the diverse needs of enterprises, researchers, and developers.

Architecture Overview

Released in late 2024 and gaining widespread adoption in 2025, the NVIDIA H200 is based on the Hopper architecture. This GPU builds on the success of the H100, offering higher bandwidth memory (HBM3e), improved tensor core performance, and advanced AI features tailored for both training and inference.

Performance

- AI Throughput: Up to 1.5 PFLOPS (BF16), 96 TFLOPS (FP16)

- Memory: 128 GB HBM2e, 3.6 TB/s bandwidth

- Power Draw: 600 Watts

- Key Features: Integrated networking, advanced tensor engines, native Ethernet

Software Ecosystem

- SynapseAI, TensorFlow, PyTorch, ONNX Runtime

- Native support for popular AI libraries

Real-World Use Cases

- Scalable training and inference clusters

- Computer vision, speech recognition, enterprise AI

Pros and Cons

- High scalability with Ethernet-based fabric

- Competitive pricing, solid performance-per-watt

- Smaller memory pool than AMD MI300X

Data Flow

Official Product Page: Google Cloud TPU v5e

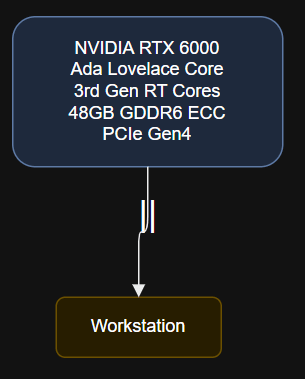

NVIDIA RTX 6000 Ada

Third-Party Review:

Google Cloud TPU v5e Documentation

Architecture Overview

Gaudi 3 is Intel’s latest purpose-built AI accelerator, designed for performance and efficiency in both training and inference. It leverages an innovative scalable matrix engine and high-speed Ethernet interconnect.

Performance

- AI Throughput: 1,398 TFLOPS (Tensor, FP8), 91.1 TFLOPS (FP32)

- Memory: 48 GB GDDR6 ECC, 960 GB/s bandwidth

- Power Draw: 300 Watts

- Key Features: Third-generation RT cores, DLSS 3.0, Ada Lovelace tensor cores

Software Ecosystem

- CUDA 12.x, OptiX, TensorRT, DirectML

- Extensive support for professional applications and AI toolkits

Real-World Use Cases

- AI research, content creation, digital twins

- On-premises inference, rapid prototyping

Pros and Cons

- Best-in-class workstation GPU for AI and graphics

- Lower power draw, fits standard workstations

- Not ideal for ultra-large-scale training tasks

GPU Core

Third-Party Review:

AnandTech H200 Review

Google TPU v5e

Third-Party Review:

Tom’s Hardware Gaudi 3 Preview

Architecture Overview

Third-Party Review:

Puget Systems RTX 6000 Ada Review

Performance

- AI Throughput: Up to 140 TFLOPS (BF16/FP16) per chip

- Memory: 64 GB HBM2e per chip

- Power Draw: Cloud managed (energy-efficient design)

- Key Features: 256 TPU v5e chips per pod, high-speed interconnect

Software Ecosystem

- TensorFlow, JAX, PyTorch (via XLA)

- Deep integration with Google Cloud services

Real-World Use Cases

- Large-scale training and inference on Google Cloud

- ML model serving, research workloads

Pros and Cons

- Seamless cloud scaling, no local hardware needed

- Cost-effective for burst workloads

- Less control compared to on-premises GPUs

Cloud TPU Pod

Official Product Page: NVIDIA RTX 6000 Ada Generation

| Model | Architecture | Year | AI Perf. (TFLOPS/PFLOPS) | Memory (GB) | Power (W) | Software | Best Workloads | Price | Official Link |

|---|---|---|---|---|---|---|---|---|---|

| NVIDIA H200 | Hopper | 2025 | 1.2 PFLOPS (FP8) | 141 HBM3e | 700 | CUDA, TensorRT | LLM, GenAI, HPC | Premium | NVIDIA |

| AMD MI300X | CDNA 3 | 2025 | 1.0 PFLOPS (FP8) | 192 HBM3 | 750 | ROCm, HIP | Foundation models, Science | Premium | AMD |

| Intel Gaudi 3 | Gaudi | 2025 | 1.5 PFLOPS (BF16) | 128 HBM2e | 600 | SynapseAI | Scale clusters, Vision | Competitive | Intel |

| RTX 6000 Ada | Ada Lovelace | 2025 | 1,398 TFLOPS (FP8) | 48 GDDR6 | 300 | CUDA, OptiX | Workstation, Content, AI | High-End | NVIDIA |

| Google TPU v5e | TPU | 2025 | 140 TFLOPS (BF16/FP16) | 64 HBM2e | Cloud | TensorFlow, XLA | Cloud-scale AI, Serving | Pay-as-you-go |

Conclusion

The RTX 6000 Ada is built on NVIDIA’s Ada Lovelace architecture and targets professional workstations. It offers a balance of AI, graphics, and simulation capabilities, making it suitable for researchers and developers.