Introduction

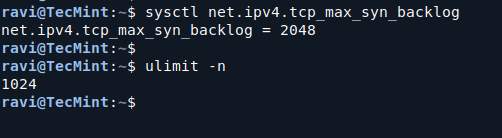

A simple chat session using system and user roles with an OpenAI-compatible API.

In both simple applications and complex agentic systems, role-based formatting is essential for reliable, context-aware, and effective LLM outcomes. This article will demystify the core roles in LLM conversation, explore advanced agentic roles, and walk through hands-on examples for developers.

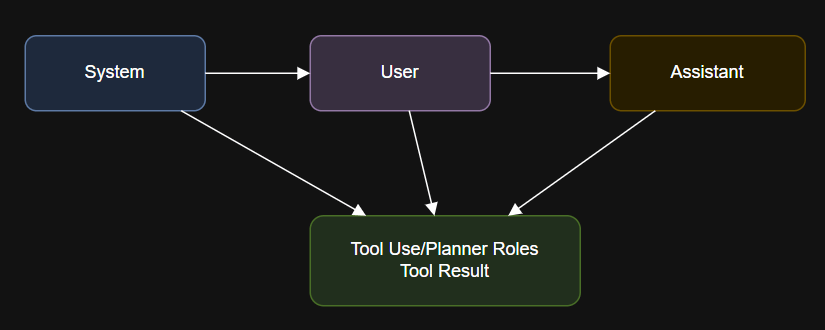

At the heart of LLM chat and agent applications is the idea of roles. Each message is tagged by role—system, user, assistant, or special agentic types—to make context and intent crystal clear. This helps the model track history, interpret meaning, and produce consistent, relevant outputs.

The Fundamentals: Roles in LLM Conversations

Role-based formatting is essential for building LLM-powered chatbots, agents, and automated workflows. By structuring every interaction around clear roles, you unlock stability, context awareness, and the ability to automate reasoning and tool use.

Whether you are working with basic chat or advanced agentic systems, start by getting roles right, and your AI will become more reliable, interpretable, and effective.

Core Roles

- System: Sets instructions, tone, or rules for the session.
  Example: “You are a financial advisor. Always recommend conservative strategies.”

- User: The person’s input, question, or command.
  Example: “How should I invest $5,000 for long-term growth?”

- Assistant: The LLM’s reply, influenced by the system message and latest user input.
  Example: “For long-term growth, consider diversified index funds and consistent contributions.”
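As a minimal sketch, the three core roles map directly onto the message list that OpenAI-compatible chat APIs accept. The `validate_messages` helper below is hypothetical and not part of any SDK. It only checks that each message carries a known role and string content, and that a system message, if present, comes first (a common convention, assumed here rather than required by every API).

```python
CORE_ROLES = {"system", "user", "assistant"}


def validate_messages(messages):
    """Hypothetical helper: check role tags before sending messages to a model."""
    for index, message in enumerate(messages):
        role = message.get("role")
        if role not in CORE_ROLES:
            raise ValueError(f"message {index} has unknown role: {role!r}")
        if not isinstance(message.get("content"), str):
            raise ValueError(f"message {index} needs string content")
        # Assumed convention: the system message opens the conversation
        if role == "system" and index != 0:
            raise ValueError("system message is expected to come first")
    return messages


conversation = validate_messages([
    {"role": "system", "content": "You are a financial advisor. Always recommend conservative strategies."},
    {"role": "user", "content": "How should I invest $5,000 for long-term growth?"},
    {"role": "assistant", "content": "For long-term growth, consider diversified index funds and consistent contributions."},
])

roles = [message["role"] for message in conversation]
print(roles)
```

A check like this runs before any network call, so a mistyped role shows up as a local error rather than as a rejected request or a confused model.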

Advanced Roles for Agents

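    from openai import OpenAI

    client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

    # Describe the tool to the model as a JSON Schema function definition
    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_weather",
                "description": "Returns the current weather for a city.",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {"type": "string", "description": "City name"}
                    },
                    "required": ["location"],
                },
            },
        }
    ]

    messages = [
        {"role": "system", "content": "You are a weather assistant with access to a weather tool."},
        {"role": "user", "content": "What is the weather in London today?"},
    ]

    # LLM decides to call the tool
    response = client.chat.completions.create(
        model="your-llm-model",  # placeholder: substitute a real model name
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )

    # Keep the assistant message that contains the tool call in the history.
    # This assumes the model chose to call the tool; tool_calls is None otherwise.
    assistant_message = response.choices[0].message
    tool_call = assistant_message.tool_calls[0]
    messages.append(assistant_message)

    # App executes the tool, then adds the result, linked to the call by its id:
    messages.append({
        "role": "tool",
        "tool_call_id": tool_call.id,
        "content": '{"weather": "Cloudy, 16°C."}',
    })

    # LLM generates the final user-facing answer using this tool result.
    final_response = client.chat.completions.create(
        model="your-llm-model",
        messages=messages,
        tools=tools,
    )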
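The "app executes the tool" step can be sketched without any network access. The example below assumes tool calls arrive in the OpenAI-style shape, with an `id`, a function `name`, and JSON-encoded `arguments`. It uses plain dictionaries to stay self-contained, whereas the SDK returns objects with the same fields as attributes. `TOOL_REGISTRY`, `run_tool_call`, the local `get_weather` stub, and the call id are all hypothetical names invented for illustration.

```python
import json


def get_weather(location):
    """Stand-in implementation; a real app would query a weather service."""
    return {"location": location, "weather": "Cloudy, 16°C."}


# Hypothetical registry mapping tool names to local Python functions
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call):
    """Execute one tool call and build the matching role="tool" message."""
    name = tool_call["function"]["name"]
    # The model supplies its arguments as a JSON-encoded string
    arguments = json.loads(tool_call["function"]["arguments"])
    result = TOOL_REGISTRY[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],  # ties the result to the call it answers
        "content": json.dumps(result, ensure_ascii=False),
    }


# Hand-written example in the shape of a tool call; the id is made up
example_call = {
    "id": "call_123",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"location": "London"}'},
}

tool_message = run_tool_call(example_call)
print(tool_message)
```

Keeping the name-to-function mapping in one registry means a new tool only needs a schema entry and a registry entry. The dispatch code stays unchanged.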

3. Agent Development Frameworks

What sets successful LLM-powered solutions apart is not just the raw size or architecture of the model, but how you design the interaction. The secret is in the structure: defining clear conversational roles so that the model always knows who is speaking, what their intent is, and how the conversation has unfolded so far.

- Context Clarity: Clearly distinguishes instructions, user intent, model output, and external actions.

- Stable Conversations: Prevents drift, loss of memory, or confusion in multi-turn dialogs.

- Advanced Workflows: Enables reasoning, tool-calling, and agent planning by separating each type of message.

- Modularity: Makes it easier to debug, extend, and automate complex flows.

How Roles Drive Agentic Systems

Large Language Models (LLMs) have redefined how we communicate with machines. From friendly chatbots to multi-step AI agents that reason, plan, and interact with external tools, these models are powering a new generation of intelligent systems.

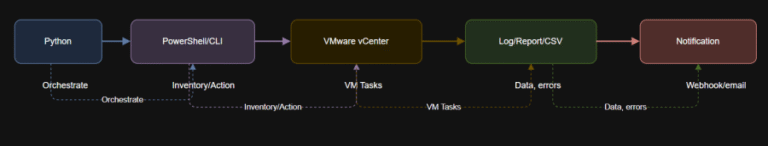

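- Memory: The full message history, tagged by role, is sent with every LLM call. Chat-style APIs typically keep no state between calls, so this resent history is what lets the model refer back to earlier turns. Longer-term memory works the same way, by storing past messages or summaries and adding them back into the list.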

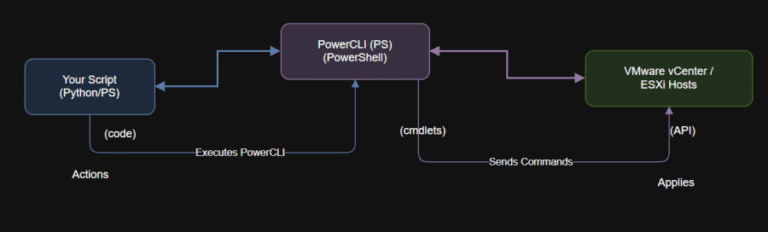

- Tool Use: The model signals a tool call (like get_weather), and your app executes it, then attaches the result back with a tool role.

- Reasoning and Planning: By splitting messages into steps and roles (system, planner, tool, result), the agent can decompose, act, and revise intelligently.

- Multi-Agent Collaboration: Different agent roles (planner, executor, researcher) can be implemented for advanced workflows.
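The memory point above can be sketched as a small wrapper that stores every message with its role and resends the whole list on each turn. `Conversation` and `fake_llm` are hypothetical names. `fake_llm` is a stand-in for a real model call, so the example runs offline.

```python
class Conversation:
    """Keeps role-tagged history and resends all of it on every turn."""

    def __init__(self, system_prompt, llm):
        self.llm = llm  # any callable: list of messages -> reply string
        self.messages = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text):
        self.messages.append({"role": "user", "content": user_text})
        # The full history, not just the latest question, goes to the model
        reply = self.llm(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(messages):
    """Stand-in for a real model call; reports how much history it received."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"I have seen {len(user_turns)} user message(s)."


chat = Conversation("You are a travel assistant.", fake_llm)
first = chat.ask("Where should I go for a sustainable vacation in Europe?")
second = chat.ask("What about in winter?")
print(second)
```

To use a real model, `fake_llm` could be replaced with a function that passes the messages to `client.chat.completions.create` and returns `response.choices[0].message.content`. Very long histories would also need trimming or summarizing to fit the model's context window, which this sketch leaves out.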

Role-Based Conversation Structure

Modern agentic systems require more nuanced structure, especially when the model calls tools, reasons step by step, or plans actions.

Examples: Role-Based Prompting in Practice

1. Core Conversational Roles

Using roles effectively transforms your LLM application. The simplest case is a chat session with a system message and a user message sent to an OpenAI-compatible API:

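    from openai import OpenAI

    client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

    messages = [
        {"role": "system", "content": "You are a travel assistant. Suggest eco-friendly trips."},
        {"role": "user", "content": "Where should I go for a sustainable vacation in Europe?"},
    ]

    response = client.chat.completions.create(
        model="your-llm-model",  # placeholder: substitute a real model name
        messages=messages,
    )

    # The assistant's reply is the next message in the conversation
    print(response.choices[0].message.content)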

Additional roles, such as the tool role, enable LLMs to fetch real-world data. Keeping every message tagged by role in this way ensures clarity, memory, and robust agentic flows.

Conclusion

In agent-based architectures, roles become the backbone of the entire workflow.

Here is how modern agentic setups leverage roles: