November 15, 2020

NSX-T Transport VLANs and Inter TEP Communication | LAB2PROD

Inter TEP Communication, Sharing Transport VLANs: Unpacking One of The Latest Features In NSX-T 3.1

How Do TEPs Communicate?

Table of Contents

- What are TEPs and Transport VLANs?

- How they communicate: Both host to host and host to Edge Appliances

- How TEPs need to be configured when the Edge Appliances reside on a host transport node in releases prior to NSX-T 3.1

- A streamlined method of TEP configuration in NSX-T 3.1

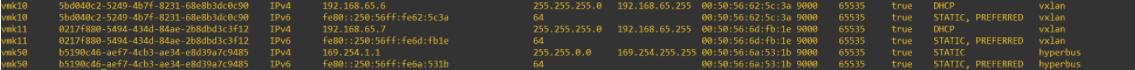

TEP interfaces on the edge appliance, showing the same IP range.

This is a Wireshark view of the same capture to make reading easier.

What are Tunnel Endpoints (TEPs) and Transport VLANs?

The above has demonstrated how hosts communicate via their TEP IP addresses; this behaviour is the same for host to edge communication as well.

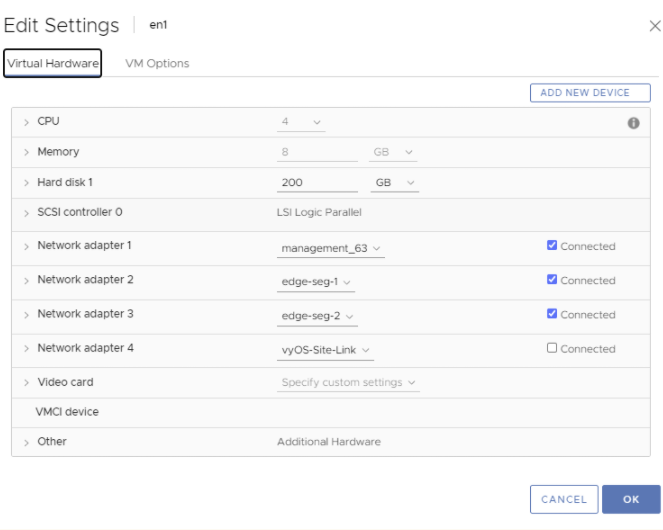

Next I will attach these segments as the uplinks for the edge transport node VMs and reconfigure the profiles to use the same Transport VLAN (65).

TEP IP Assignment:

- Host transport node TEP IP addresses can be assigned to hosts statically, using DHCP or using predefined IP Pools in NSX-T.

- Edge Appliances can have their TEP IP addresses assigned statically or using a pool.
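As a rough illustration of pool-based assignment, the sketch below hands out consecutive TEP addresses from a range. It is not how NSX-T implements IP Pools; the subnet, start address and function name are assumptions made up for this example.

```python
import ipaddress


def allocate_teps(cidr, start, count):
    """Return `count` consecutive addresses from `cidr`, beginning at `start`.

    Hypothetical helper: it only mimics the idea of a predefined IP pool.
    """
    network = ipaddress.ip_network(cidr)
    first = ipaddress.ip_address(start)
    if first not in network:
        raise ValueError(f"{start} is not inside {cidr}")

    allocated = []
    for offset in range(count):
        candidate = first + offset
        # Refuse to leave the subnet or to hand out the broadcast address.
        if candidate not in network or candidate == network.broadcast_address:
            raise ValueError("pool exhausted")
        allocated.append(str(candidate))
    return allocated


# Assumed example values: a /24 on the transport VLAN, two TEPs per host.
host_teps = allocate_teps("192.168.65.0/24", "192.168.65.10", 2)
print(host_teps)
```

A host running multi-TEP would take two addresses from the pool, which is why the example asks for a count of two.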

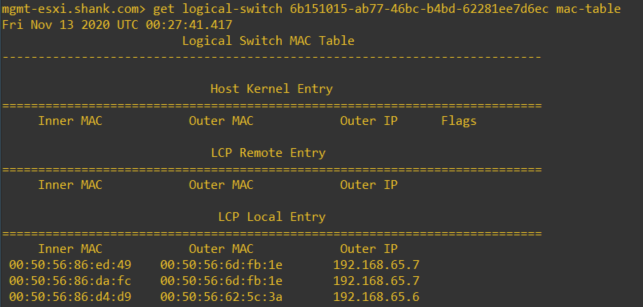

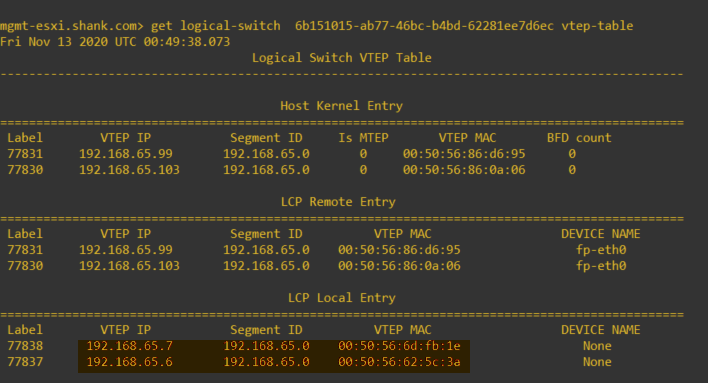

Notice that under the LCP section there are columns for Inner MAC, Outer MAC and Outer IP, and that the MAC addresses have been spread across the two TEP IPs. If we dig a little deeper, we can start by identifying what exactly those inner MACs are and where they came from, then repeat the same for the outer MAC.
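One way to read a table like this is to group the inner MACs by the outer (TEP) IP they sit behind. The sketch below does that with invented rows; every MAC and IP address in it is a placeholder and not taken from the lab output.

```python
from collections import defaultdict

# Hypothetical rows in the shape (inner MAC, outer MAC, outer IP).
rows = [
    ("00:50:56:aa:00:01", "00:50:56:ff:00:01", "192.168.65.10"),
    ("00:50:56:aa:00:02", "00:50:56:ff:00:02", "192.168.65.11"),
    ("00:50:56:aa:00:03", "00:50:56:ff:00:01", "192.168.65.10"),
]


def group_by_tep(table_rows):
    """Map each outer (TEP) IP to the inner MACs reachable behind it."""
    by_tep = defaultdict(list)
    for inner_mac, _outer_mac, outer_ip in table_rows:
        by_tep[outer_ip].append(inner_mac)
    return dict(by_tep)


for tep_ip, inner_macs in group_by_tep(rows).items():
    print(tep_ip, inner_macs)
```

With two TEPs on a host, the inner MACs end up divided between the two outer IPs, which is the spread visible in the table.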

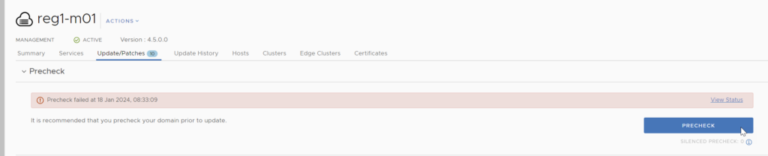

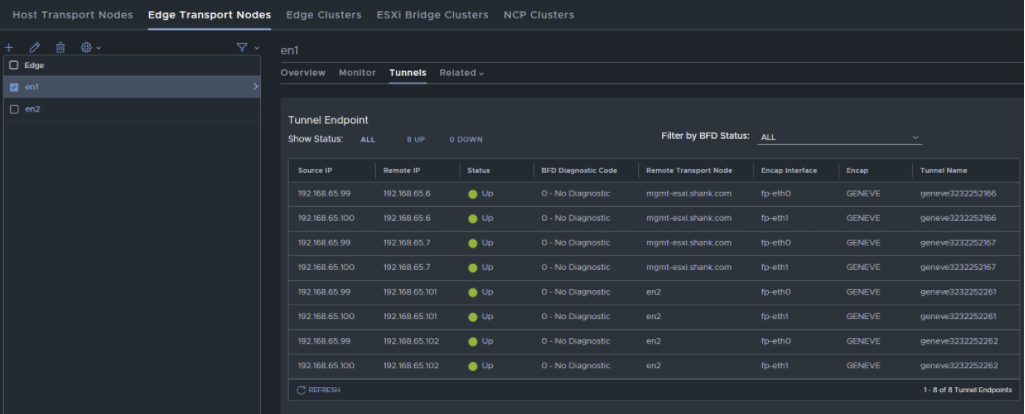

And now if we go back into the UI, the tunnels are up.

Once the packet is decapsulated the payload is exposed and actioned accordingly. This article focusses on TEPs and will not go into logical routing and switching of payload data.
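For readers who want to see what decapsulation means at the byte level, here is a minimal sketch based on the fixed 8-byte GENEVE base header defined in RFC 8926. It is not NSX-T code: the function names and the VNI value are assumptions for illustration, and the outer Ethernet, IP and UDP headers are left out.

```python
import struct

GENEVE_UDP_PORT = 6081       # IANA-assigned UDP destination port for GENEVE
ETHERNET_PROTOCOL = 0x6558   # Protocol Type for an encapsulated Ethernet frame


def build_geneve_header(vni, options=b""):
    """Build a GENEVE base header (version 0) followed by raw options."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 or len(options) // 4 > 63:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    first_byte = len(options) // 4          # version 0 in the top two bits
    flags = 0                               # O and C bits clear
    # The 24-bit VNI shares a 32-bit word with 8 reserved bits.
    return struct.pack("!BBHI", first_byte, flags, ETHERNET_PROTOCOL, vni << 8) + options


def decapsulate(udp_payload):
    """Split a GENEVE UDP payload into header fields and the inner frame."""
    first_byte, _flags, protocol, vni_word = struct.unpack("!BBHI", udp_payload[:8])
    options_length = (first_byte & 0x3F) * 4   # Opt Len counts 4-byte words
    return {
        "version": first_byte >> 6,
        "protocol": protocol,
        "vni": vni_word >> 8,
        "inner_frame": udp_payload[8 + options_length:],
    }


# Assumed example: VNI 71680 carrying a placeholder inner frame.
packet = build_geneve_header(71680) + b"inner-ethernet-frame"
print(decapsulate(packet))
```

The inner frame returned here is the payload that gets exposed once the outer headers and the GENEVE header are stripped.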

The screenshot below shows their configuration in NSX-T.

Below is the ARP table from the top of rack switch. Here you can see it has learnt the TEP IP addresses from the hosts; the MAC addresses listed are those of the hosts' vmkernel ports or the edge appliances' vmnics.

The IP packets had to be routed from the host transport nodes to the Edge appliance and vice versa. That is, the GENEVE packets had to ingress/egress on a physical uplink from the host, as there was no datapath within the host for the GENEVE tunnels.

The example below is the same output as above, this time written to a file and opened in Wireshark for easier reading.

TEPs are the source and destination IP addresses used in the IP packet for communication between host transport nodes and Edge Appliances. Without correct design, you will more than likely have communication issues between your host transport nodes participating in NSX-T and your Edge Appliances, which will lead to the GENEVE tunnel between endpoints being down. If your GENEVE tunnels are down, you will NOT have any communication between the endpoints on either side of the downed tunnel.

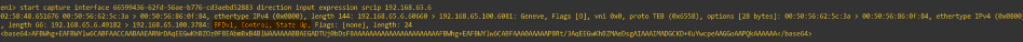

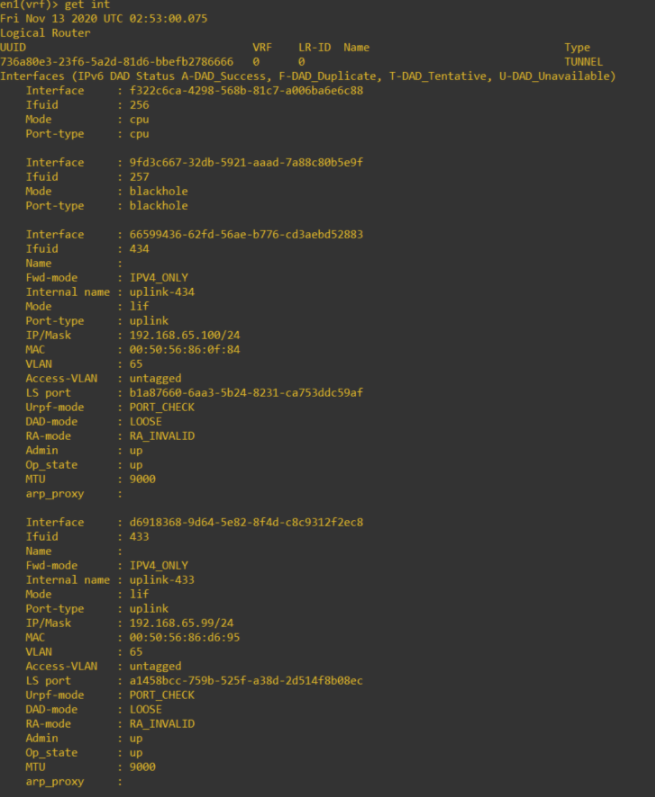

Provided below is another packet capture on the Edge appliance. If you want to single out a TEP interface, SSH into the appliance as admin, type in vrf 0 and then get interfaces. In the list, there is a field called interface. Copy the interface ID of the one you wish to run the capture on. The command to use is “start capture interface <id> direction dual file <filename>”.


NSX-T TEPs communicate with each other by using their source and destination IP addresses. The TEPs can be in different Transport VLANs (different subnets) and still communicate. If this is part of your requirements, you must ensure those Transport VLANs (subnets) are routable and can reach each other.
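A quick way to reason about whether two TEPs depend on routing is to compare the subnets they belong to. The sketch below does this with Python's standard `ipaddress` module; the addresses and prefix lengths are assumed values for illustration only.

```python
import ipaddress


def needs_routing(tep_a, tep_b):
    """Return True when two TEPs (given as 'ip/prefix') sit in different subnets."""
    interface_a = ipaddress.ip_interface(tep_a)
    interface_b = ipaddress.ip_interface(tep_b)
    return interface_a.network != interface_b.network


# Assumed addressing: host TEP on one transport subnet, edge TEP on another.
print(needs_routing("192.168.65.10/24", "192.168.66.10/24"))  # different subnets
print(needs_routing("192.168.65.10/24", "192.168.65.20/24"))  # same subnet
```

When the function returns True, the two Transport VLANs need a gateway between them that can route the GENEVE traffic in both directions.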

TEPs are a critical component of NSX-T. Each hypervisor participating in NSX-T (known as a host transport node) and each Edge Appliance will have at minimum one TEP IP address assigned to it. The host transport nodes and Edge Appliances may have two TEPs for load balancing traffic; this is generally referred to as multi-TEP.

The screenshot below is of en1 and its NIC configuration.

The video below shows Tunnel Endpoint and Logical Routing operations; it also walks through some troubleshooting.

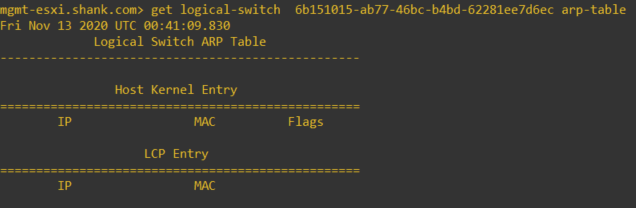

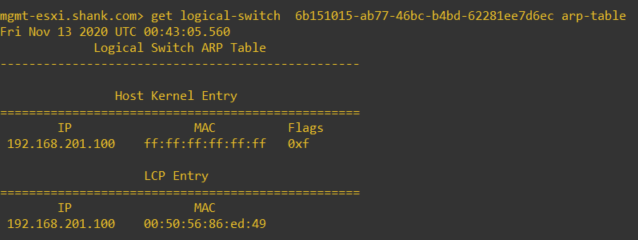

Excellent, now we have an entry in the table. If we scroll up to the first image, you can see that the inner MAC is the same as the MAC address from this entry. That means the inner MAC is the MAC address of the source VM’s vmnic.

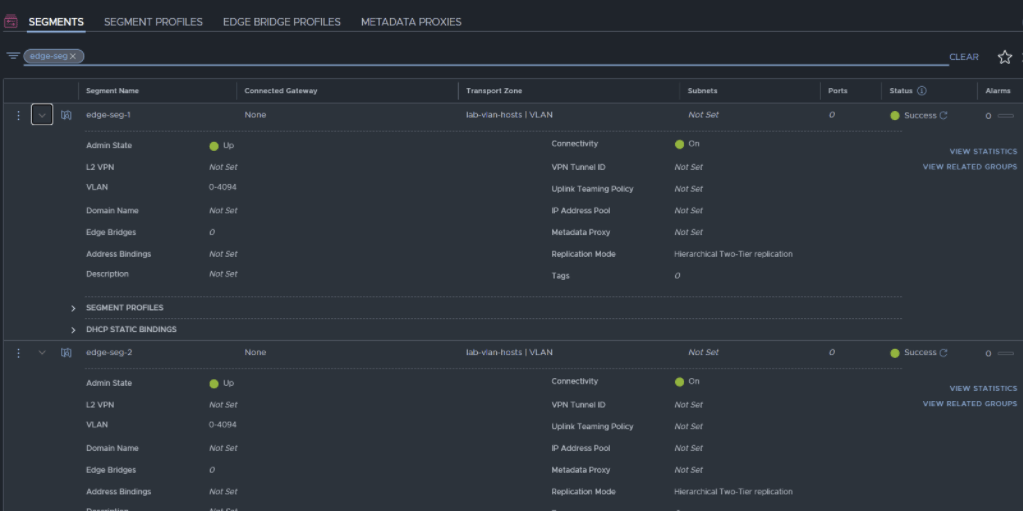

As we saw earlier in this post, even in NSX-T 3.1 when the edge TEPs are in the same Transport VLAN as the host TEPs and not on a separate vDS and set of NICs, inter TEP communication does not work. You may have also noticed that, above the vDS portgroups for the edges, there were two additional VLAN-backed segments created in NSX-T, labelled ‘edge-seg-1’ and ‘edge-seg-2’.

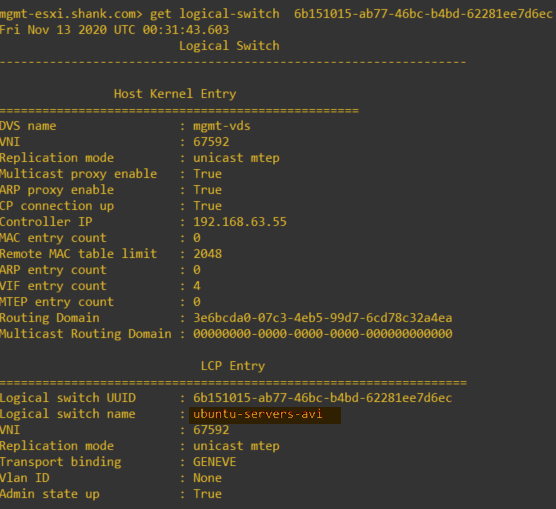

Host transport node with TEP IPs in the same range.