January 5, 2022

NSX Application Platform Part 3: NSX-T, NSX-ALB (Avi), and Tanzu

NSX-T Logical Networking, Ingress, Load Balancing, and Tanzu Kubernetes Grid Service (TKGS)

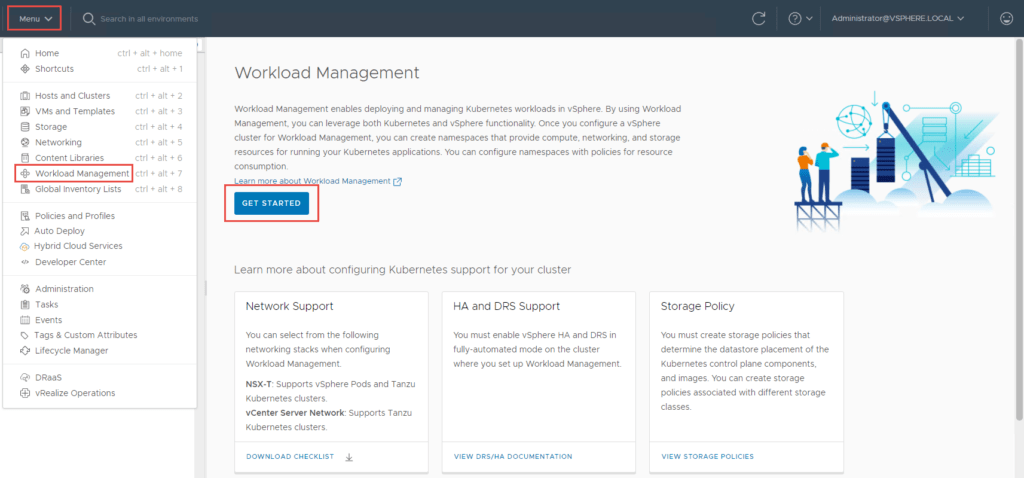

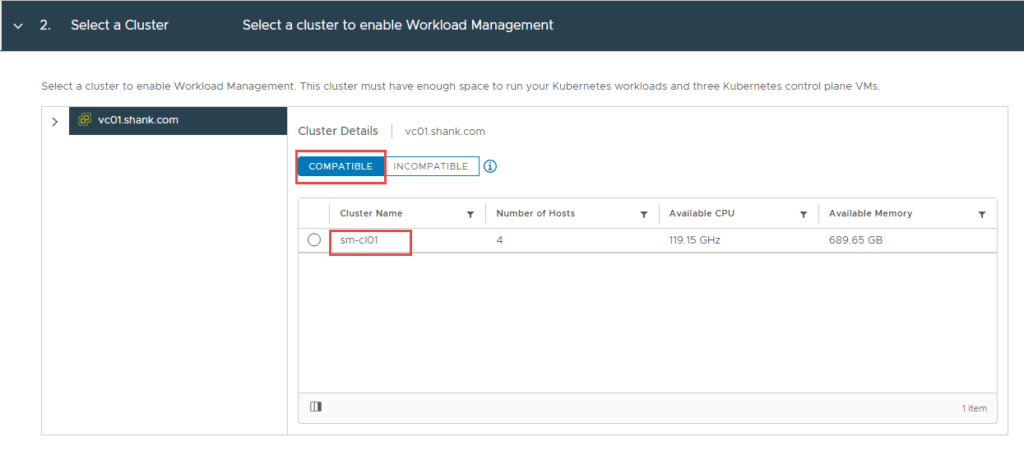

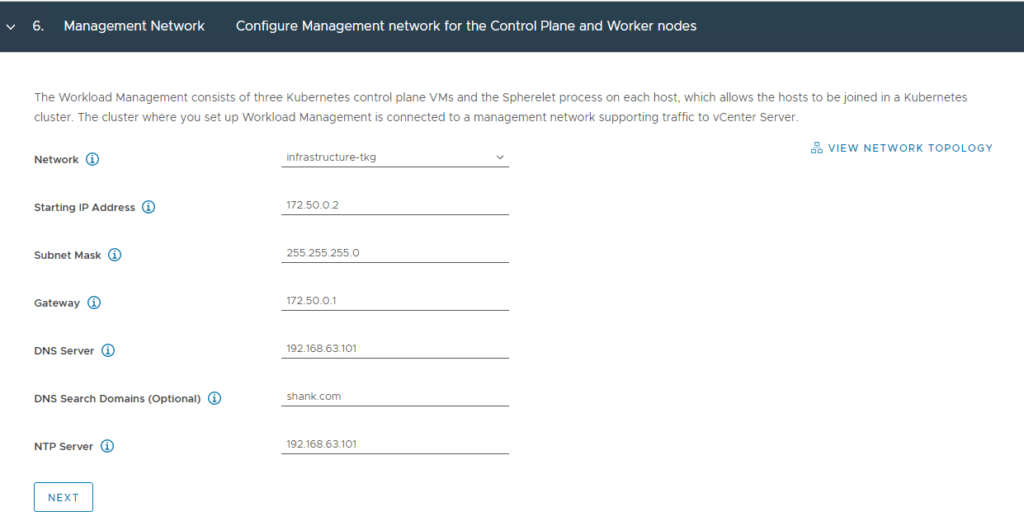

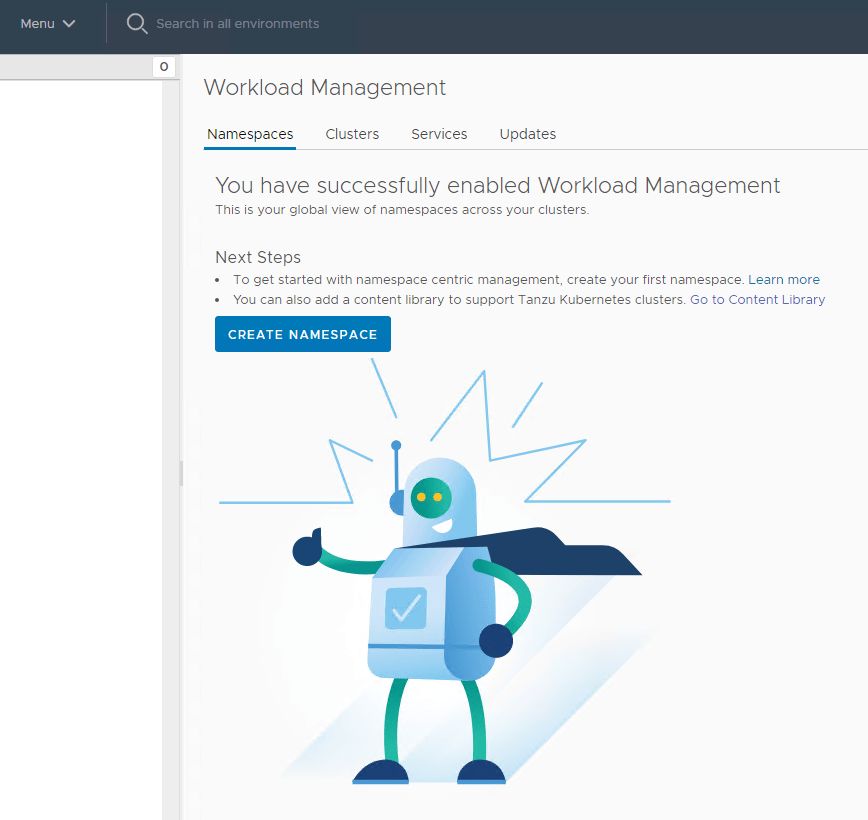

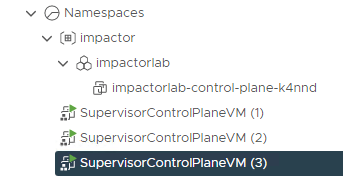

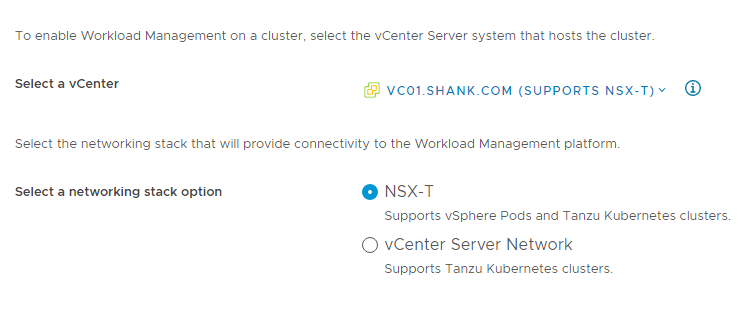

With all the pre-work now complete, we are ready to configure the Supervisor Control Plane for TKGS.

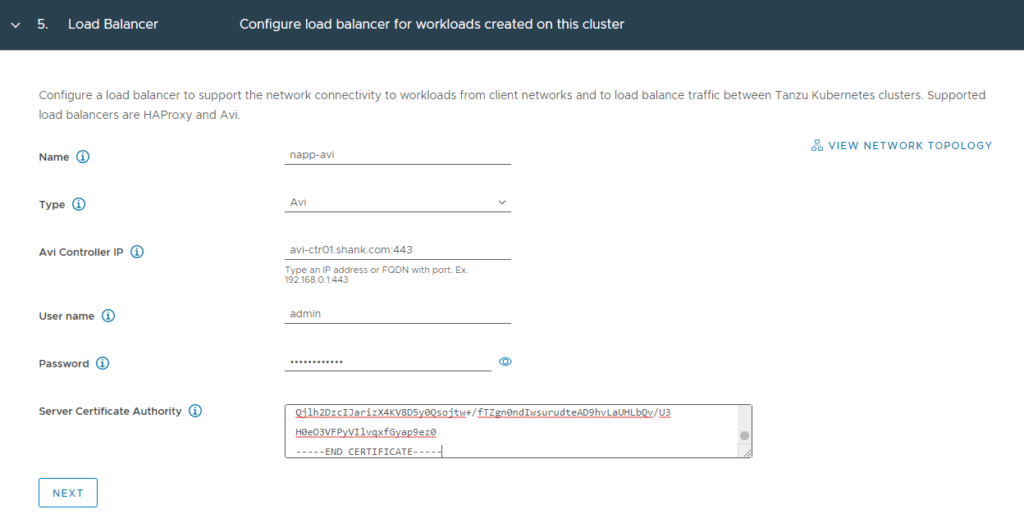

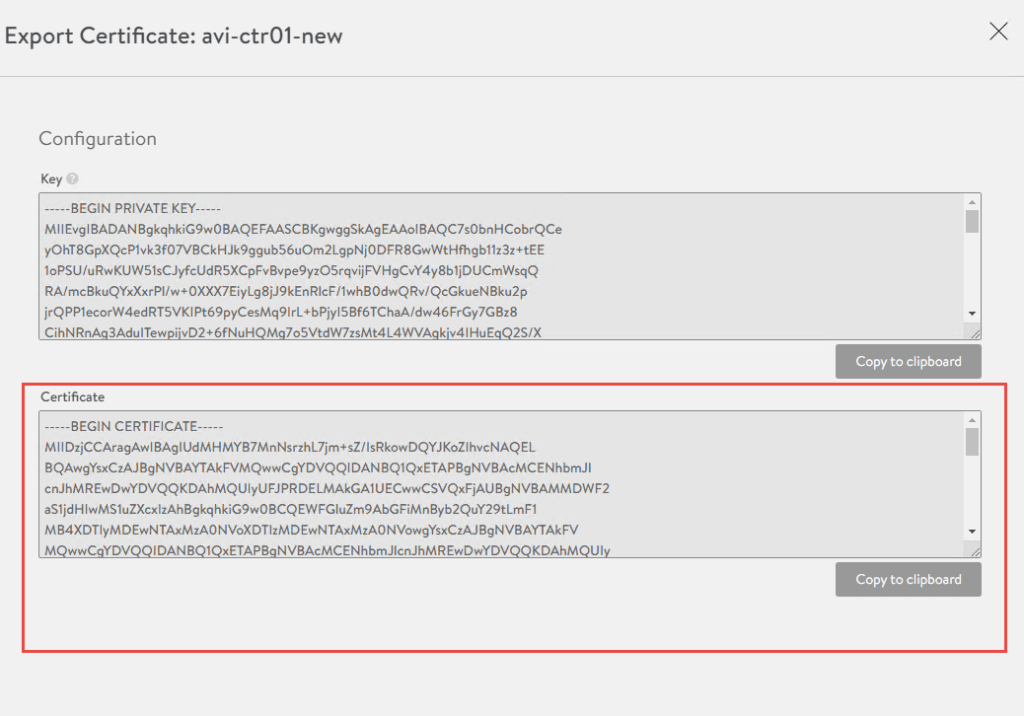

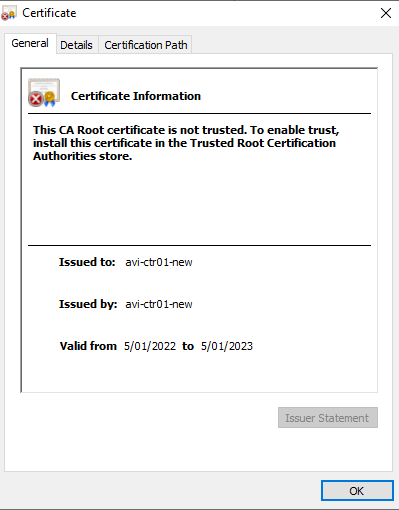

4. We will need this certificate in a later step. On the screen below, click the small down arrow next to the certificate and copy its contents into a text editor such as Notepad.

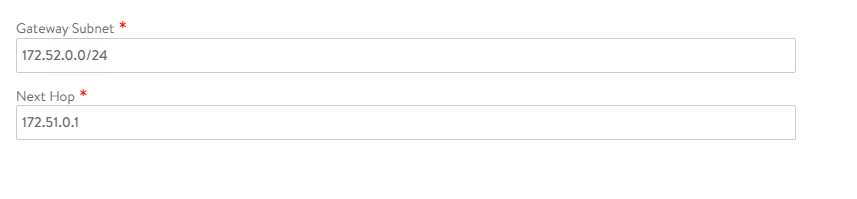

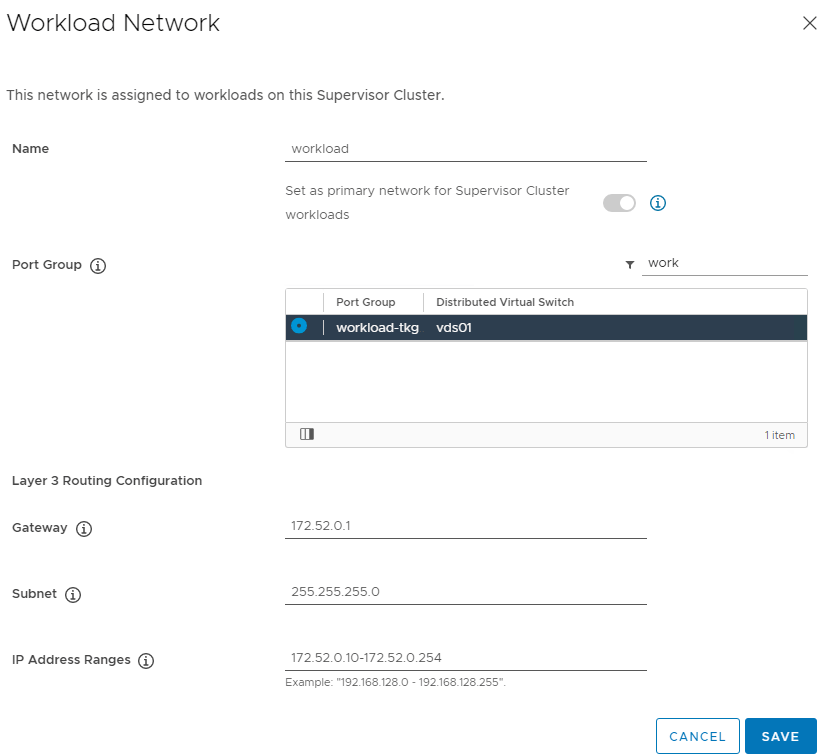

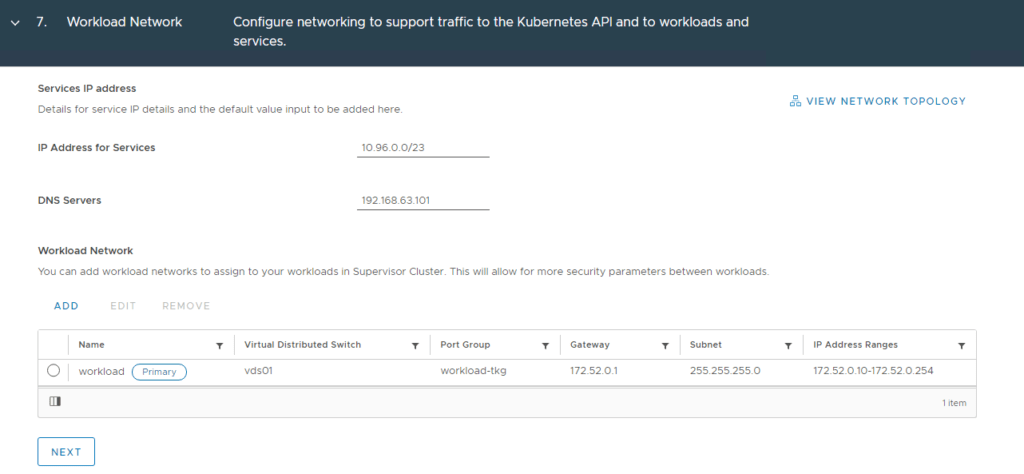

The workload network will be assigned to workload clusters deployed from the supervisor cluster. In my example, this is the “workload-tkg (172.52.0.0/24)” network. Click Save, then Next when complete.

NSX-T Networking

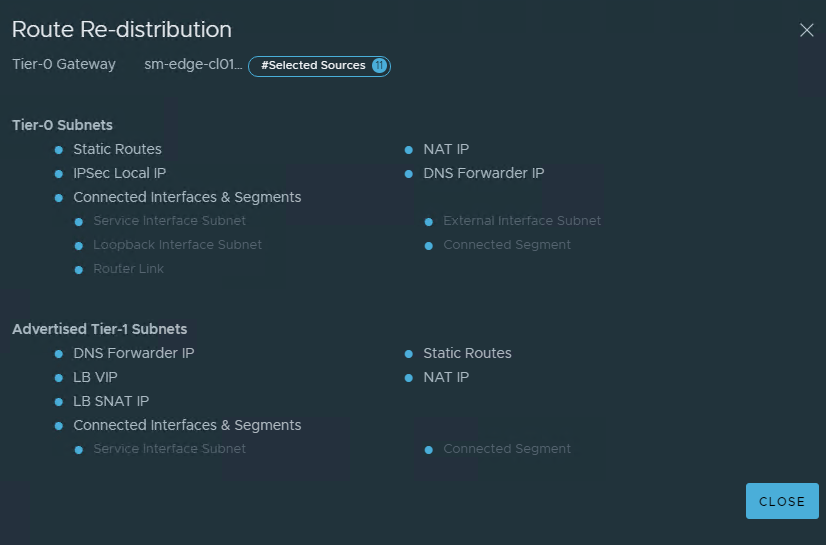

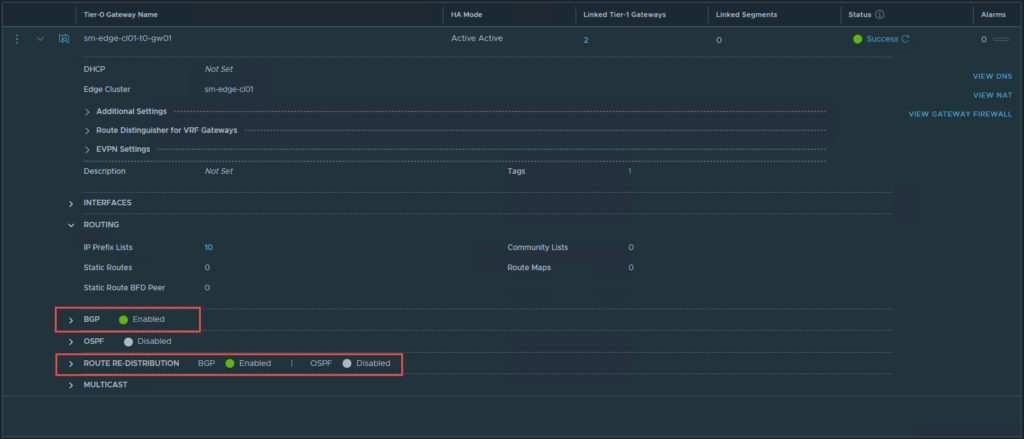

The Tier-0 gateway is responsible for advertising the networks to the physical network. In this case BGP is used; however, you may use static routes or OSPF instead. The screenshots below show the basic configuration required on the Tier-0 gateway, which is similar to the Tier-1. At a minimum, “Connected Interfaces & Segments” must be enabled for route redistribution.

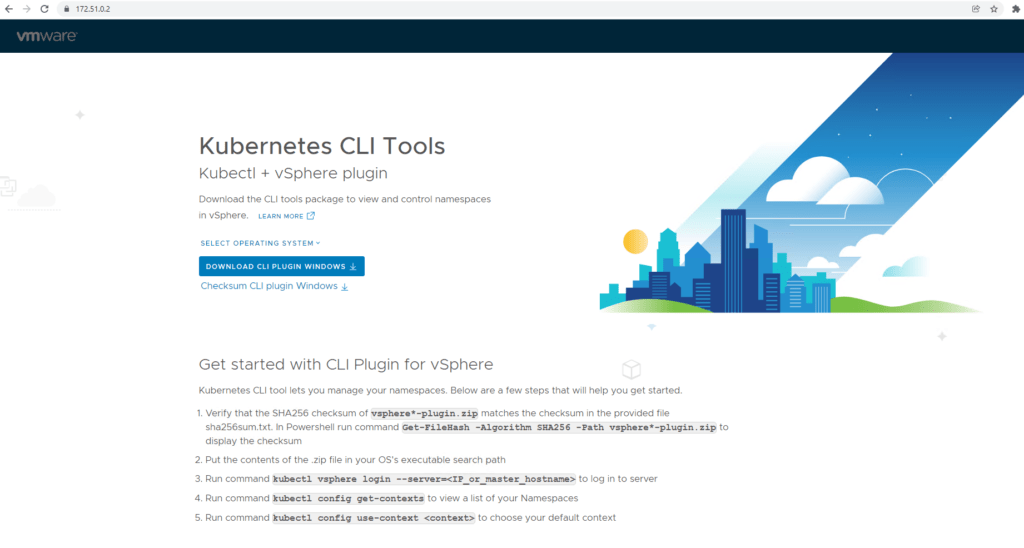

    root@jump:~# kubectl vsphere login --server 172.51.0.2 -u [email protected] --insecure-skip-tls-verify

    KUBECTL_VSPHERE_PASSWORD environment variable is not set. Please enter the password below
    Password:
    Logged in successfully.

    You have access to the following contexts:
       172.51.0.2

    If the context you wish to use is not in this list, you may need to try
    logging in again later, or contact your cluster administrator.

    To change context, use `kubectl config use-context <workload name>`

    # Change context to the supervisor context
    root@jump:~# kubectl config use-context 172.51.0.2

    # Check the namespaces
    root@jump:~# kubectl get ns
    NAME                                        STATUS   AGE
    default                                     Active   79m
    kube-node-lease                             Active   79m
    kube-public                                 Active   79m
    kube-system                                 Active   79m
    svc-tmc-c8                                  Active   76m
    vmware-system-ako                           Active   79m
    vmware-system-appplatform-operator-system   Active   79m
    vmware-system-capw                          Active   77m
    vmware-system-cert-manager                  Active   79m
    vmware-system-csi                           Active   77m
    vmware-system-kubeimage                     Active   79m
    vmware-system-license-operator              Active   76m
    vmware-system-logging                       Active   79m
    vmware-system-netop                         Active   79m
    vmware-system-nsop                          Active   76m
    vmware-system-registry                      Active   79m
    vmware-system-tkg                           Active   77m
    vmware-system-ucs                           Active   79m
    vmware-system-vmop                          Active   77m
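The login prompt above mentions the `KUBECTL_VSPHERE_PASSWORD` environment variable. As a minimal sketch, assuming you want to script the same login from the jump host without the interactive prompt, the Python below builds the command shown above and passes the password through that variable. The function names are hypothetical helpers for illustration, and the username is a placeholder you must supply yourself.

```python
from __future__ import annotations

import os
import subprocess


def build_login_command(server: str, username: str) -> list[str]:
    """Return the argv for the same login command used in the session above."""
    return [
        "kubectl", "vsphere", "login",
        "--server", server,
        "-u", username,
        "--insecure-skip-tls-verify",
    ]


def login_env(password: str) -> dict[str, str]:
    """Copy the current environment and add the variable named in the login prompt."""
    env = dict(os.environ)
    env["KUBECTL_VSPHERE_PASSWORD"] = password
    return env


def login(server: str, username: str, password: str) -> None:
    """Run the login; raises subprocess.CalledProcessError if kubectl exits non-zero."""
    subprocess.run(
        build_login_command(server, username),
        env=login_env(password),
        check=True,
    )
```

In my lab this would be called as `login("172.51.0.2", "<your SSO user>", password)`, followed by `kubectl config use-context 172.51.0.2` as before. Skipping TLS verification is acceptable in a lab, but you would likely want to trust the certificate properly in production.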

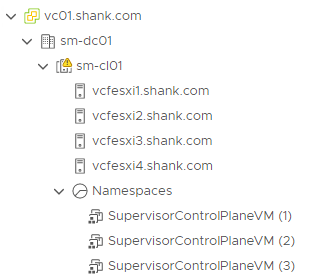

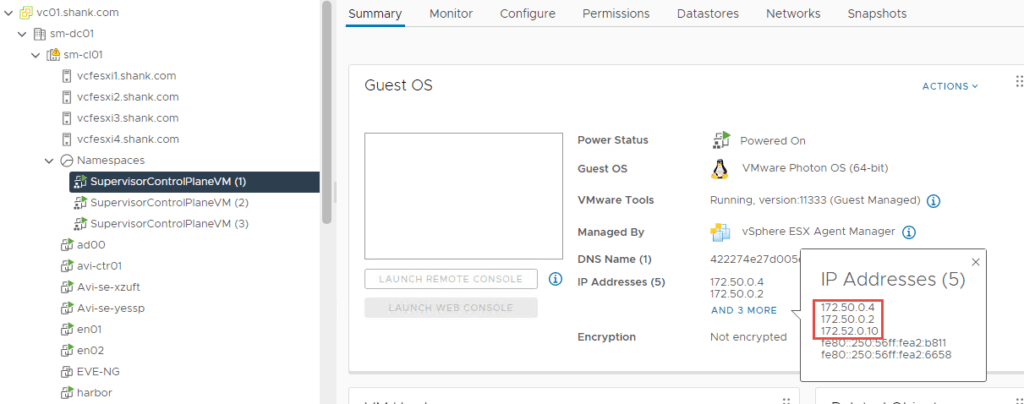

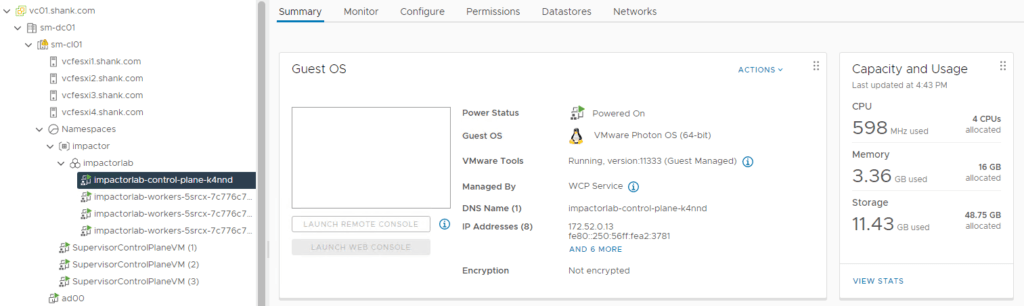

To check the addresses assigned to the supervisor control plane VMs, click on any of them. Then click More next to the IP addresses.

I have recently put together a video that walks through deploying NAPP; it can be seen here.

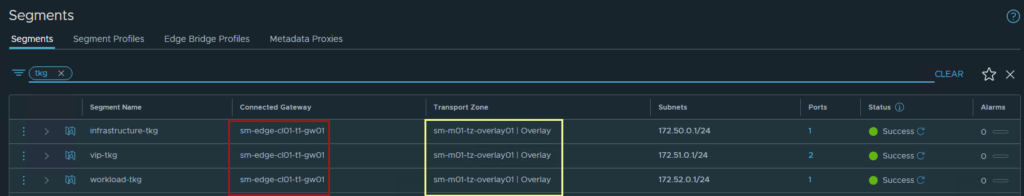

NSX-T Segments

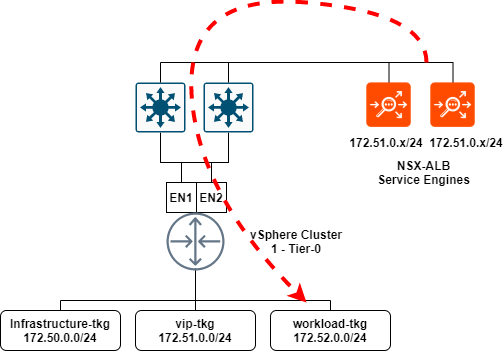

To summarise the NSX-T and NSX-ALB section: I have created segments in NSX-T which are presented to vCenter and NSX-ALB. These segments will be used for the VIP network in NSX-ALB, as well as the workload and front-end networks required for TKGS. The illustration below shows the communication from the service engines to the NSX-T workload-tkg segment, and eventually to the workload clusters that will reside on that segment.

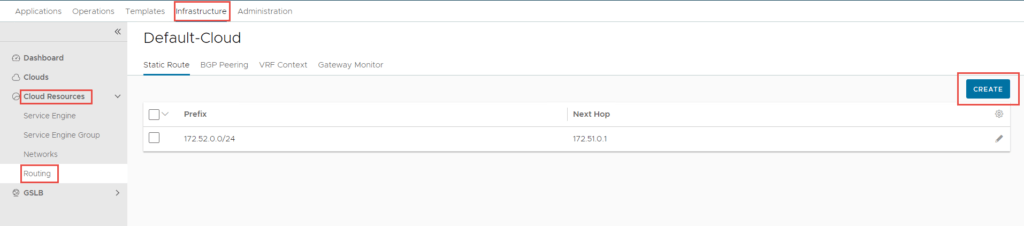

To create the static route, navigate to Infrastructure -> Cloud Resources -> Routing and click Create.

NSX-T Gateways

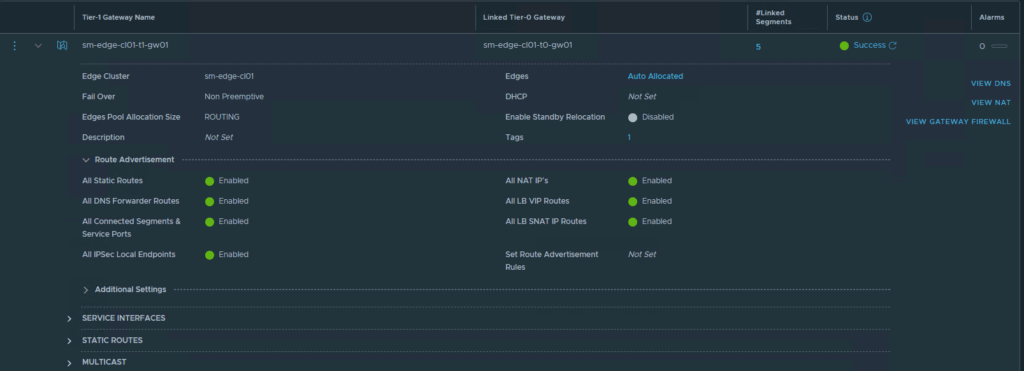

Tier-1 Gateway

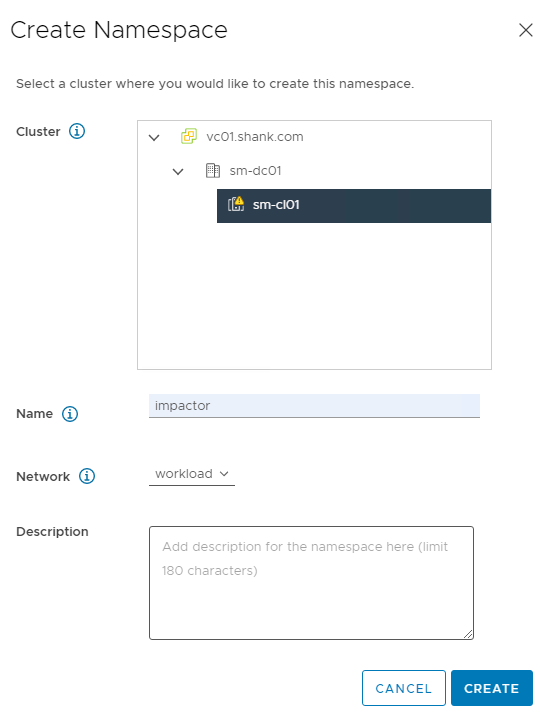

3.4 Fill in the required specifications and then click Create.

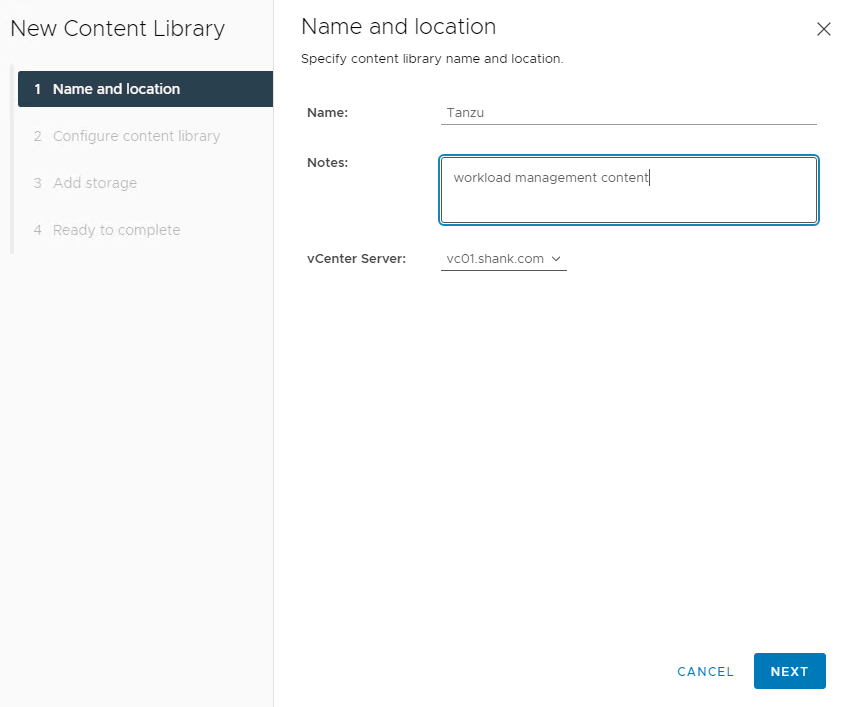

5. On the next screen, select Subscribed content library and enter the URL. Leave Download content set to immediately.

Tier-0 Gateway

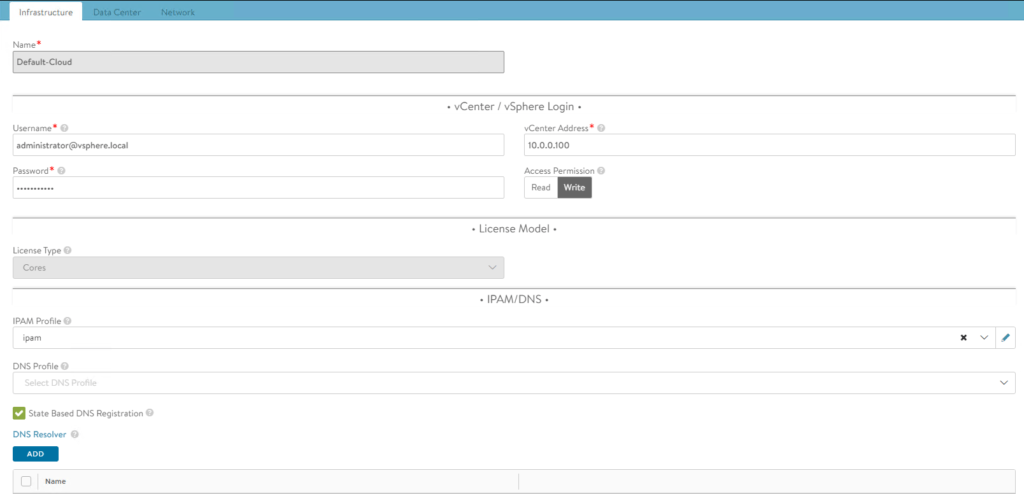

The IPAM profile will be covered in one of the following sections.

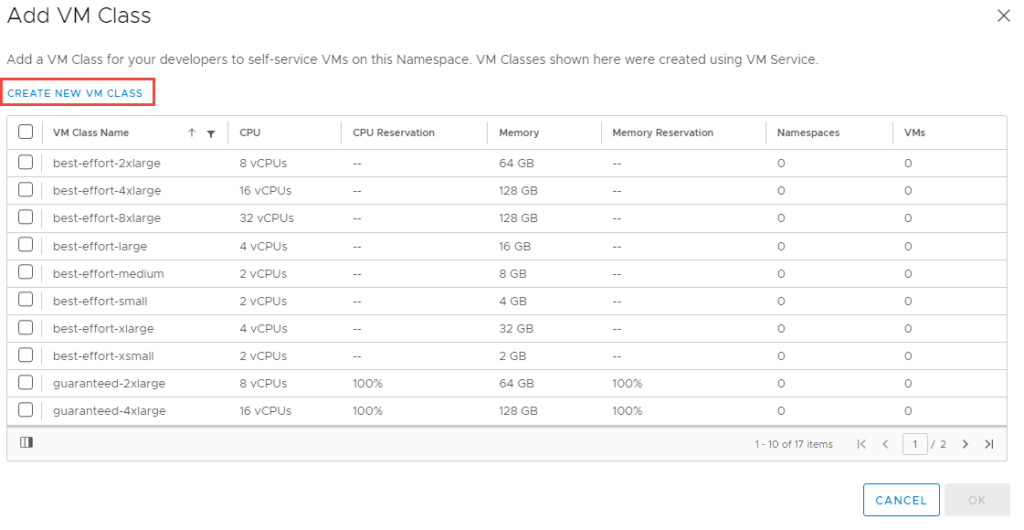

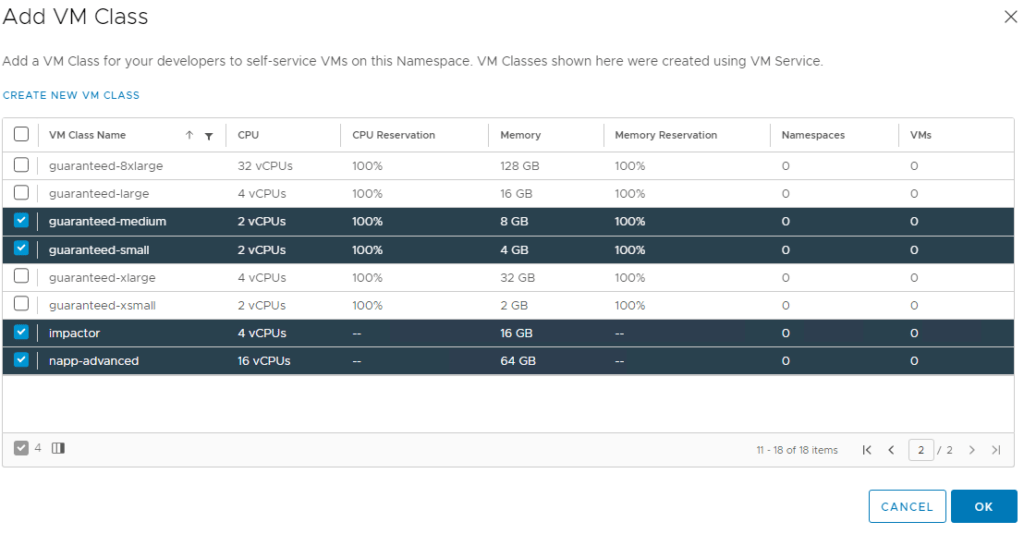

You should now be able to see the VM class you just created. I have selected it, along with some others. Click OK when you are done.

NSX Advanced Load Balancer (NSX-ALB/Avi)

The screenshot below shows the configuration of my IPAM profile.

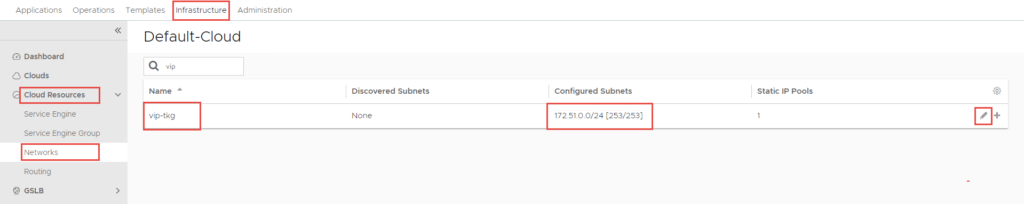

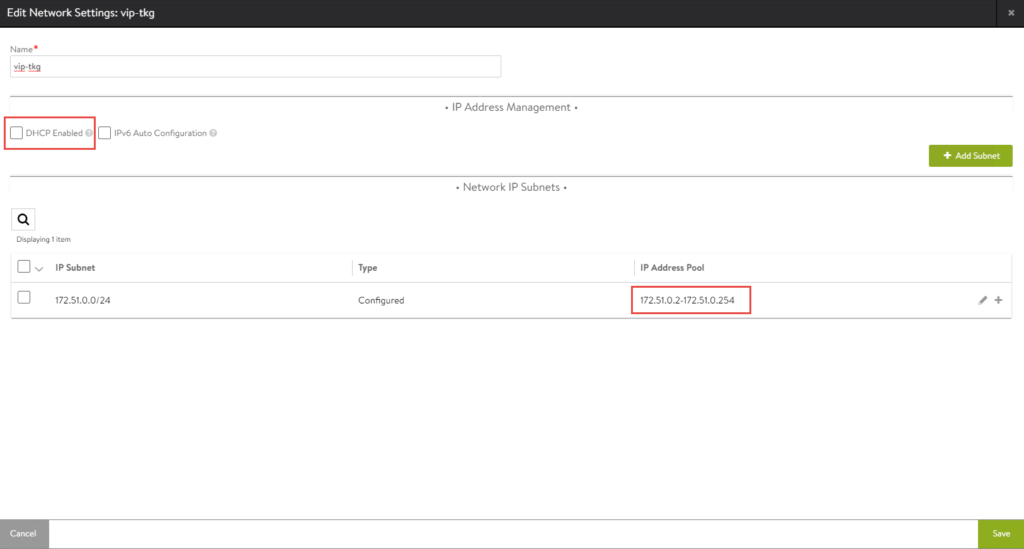

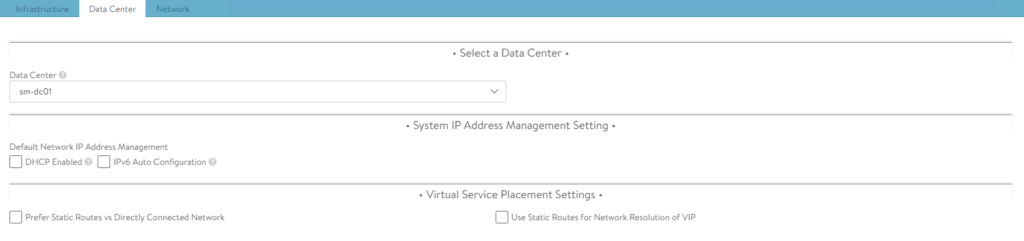

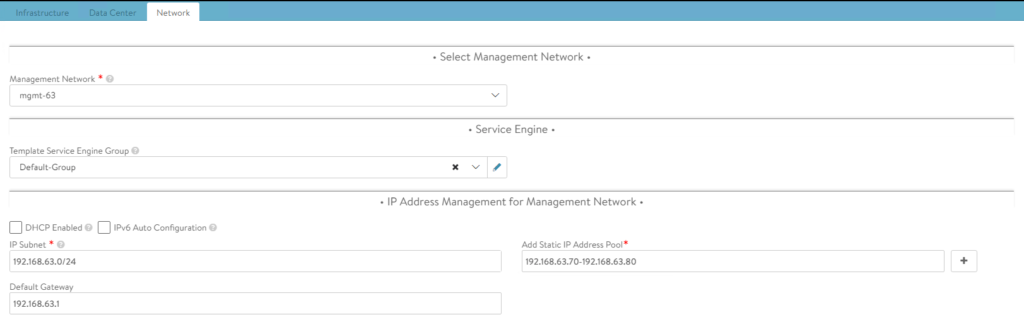

NSX-ALB Default-Cloud Configuration

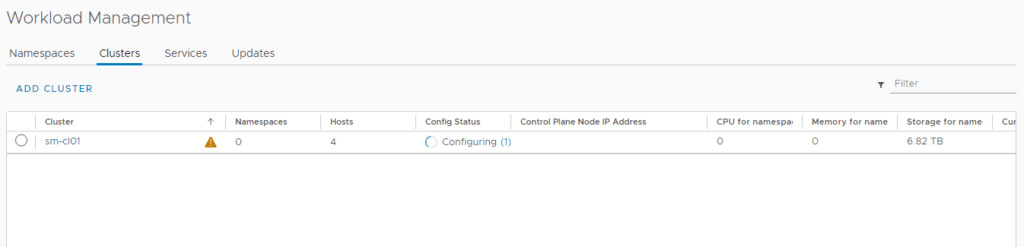

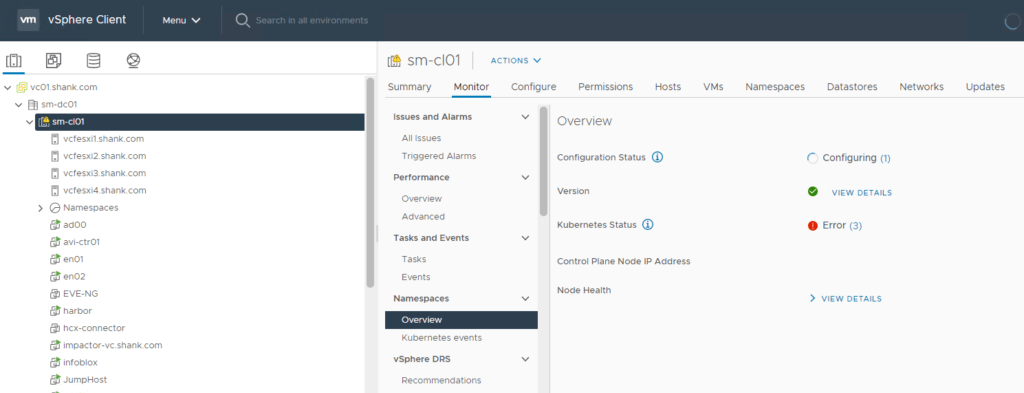

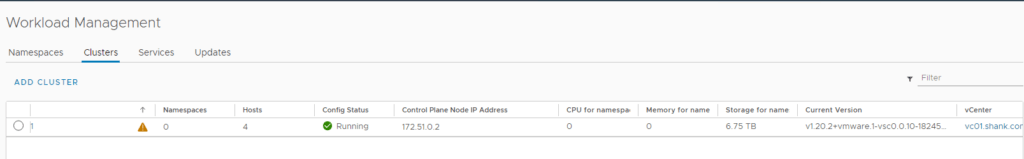

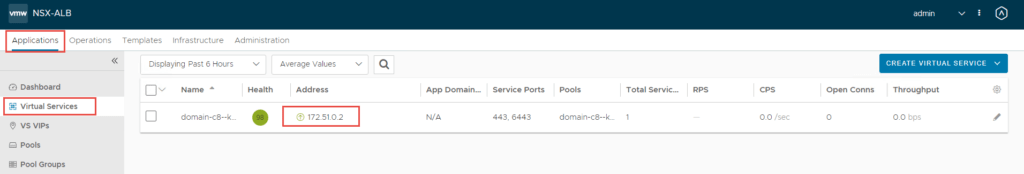

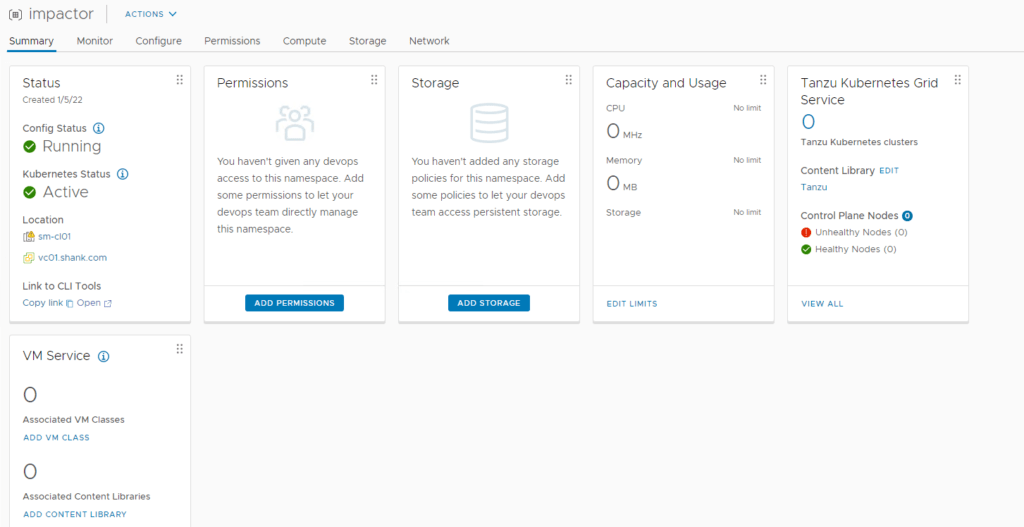

Once the deployment is complete, you should see a green tick and a status of Running under Config Status.

All three segments are connected to the same Tier-1 gateway “sm-edge-cl01-t1-gw01” and overlay transport zone “sm-m01-tz-overlay01”. Because the segments are connected to a Tier-1 gateway, it is implied that they are overlay networks and will use NSX-T logical routing, since VLAN-backed segments cannot be attached to a Tier-1 gateway.

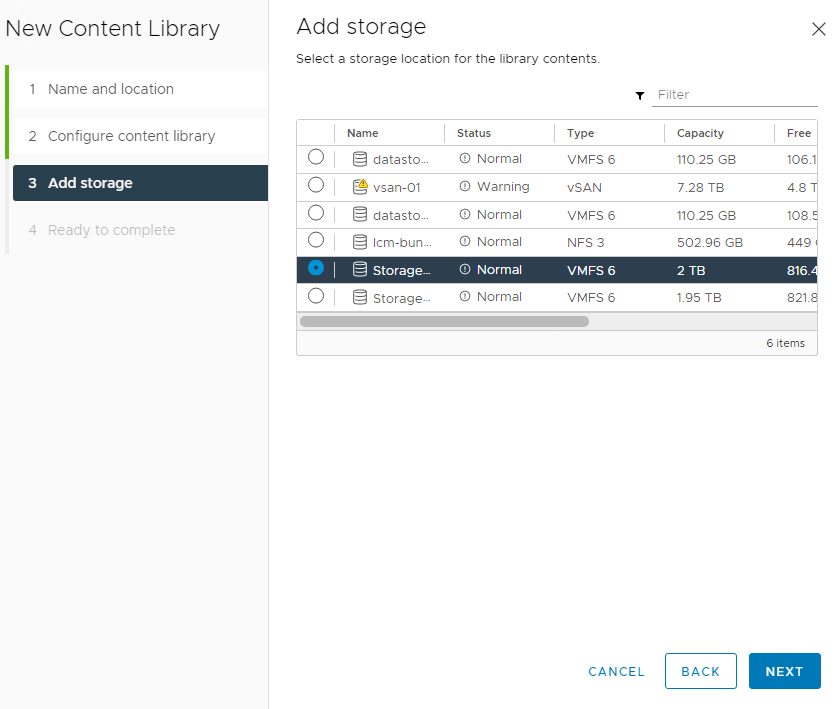

7. Select the storage device you would like the content to be stored on.

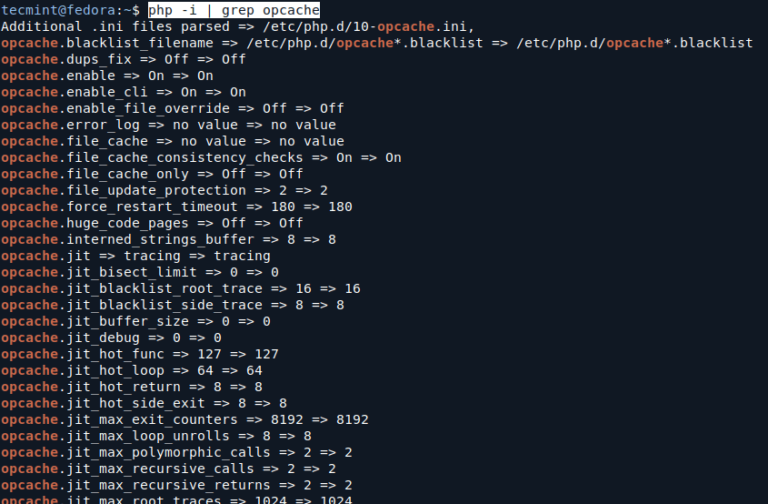

Each node has been assigned an IP address in the workload-tkg range.
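If you would rather verify this than eyeball it, a small check with Python's standard `ipaddress` module works. This is a minimal sketch: the subnet is the workload-tkg network from my example (172.52.0.0/24), while `in_workload_range` is a hypothetical helper name and the node address in the usage note is made up for illustration.

```python
import ipaddress

# workload-tkg segment from this lab; adjust to your own workload network.
WORKLOAD_TKG = ipaddress.ip_network("172.52.0.0/24")


def in_workload_range(address: str, network=WORKLOAD_TKG) -> bool:
    """Return True if the given IPv4 address falls inside the workload network."""
    return ipaddress.ip_address(address) in network
```

For example, `in_workload_range("172.52.0.10")` returns `True` for a hypothetical node address, whereas the supervisor address used for login, `172.51.0.2`, returns `False` because it lives on a different network.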

3. Click Save once complete.

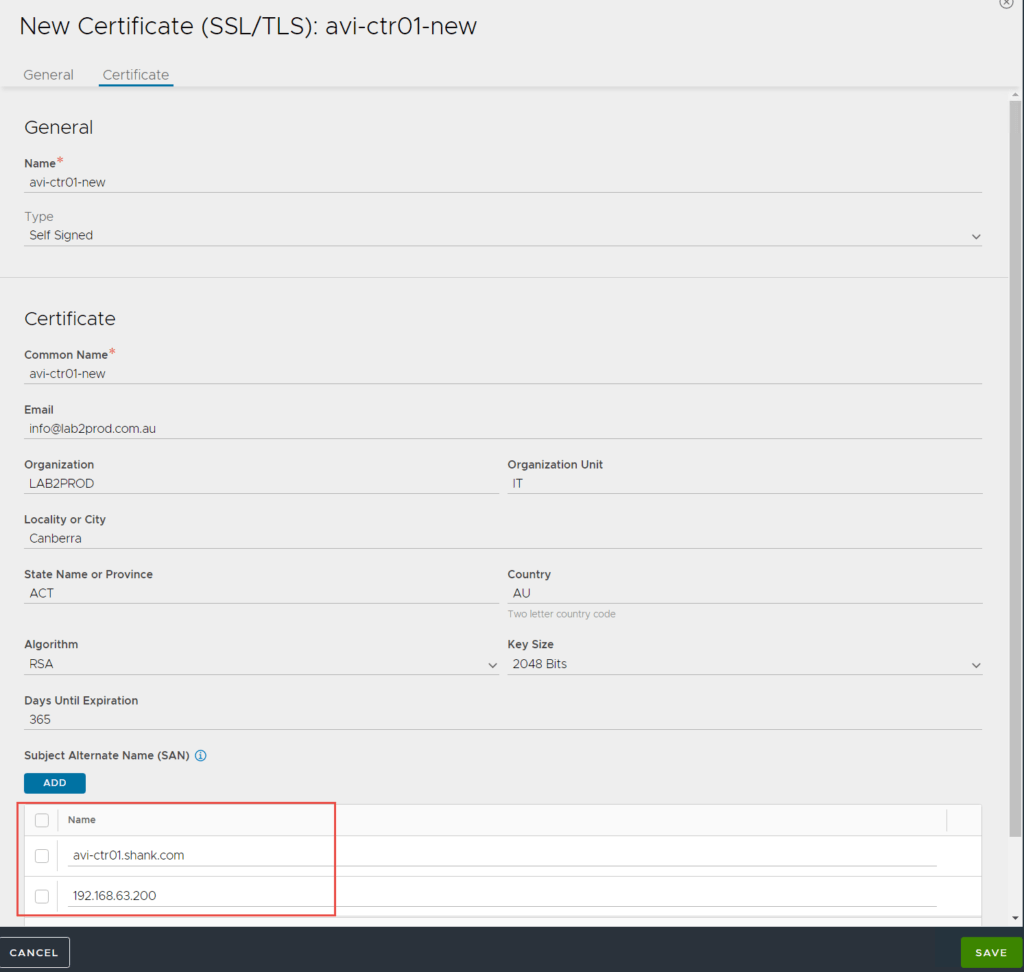

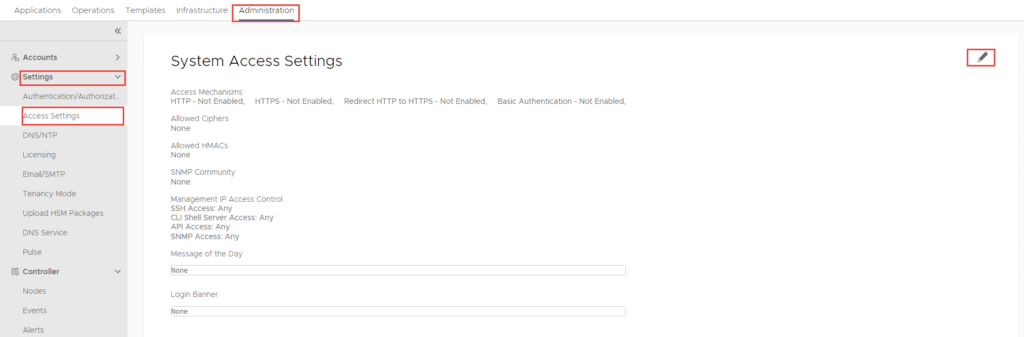

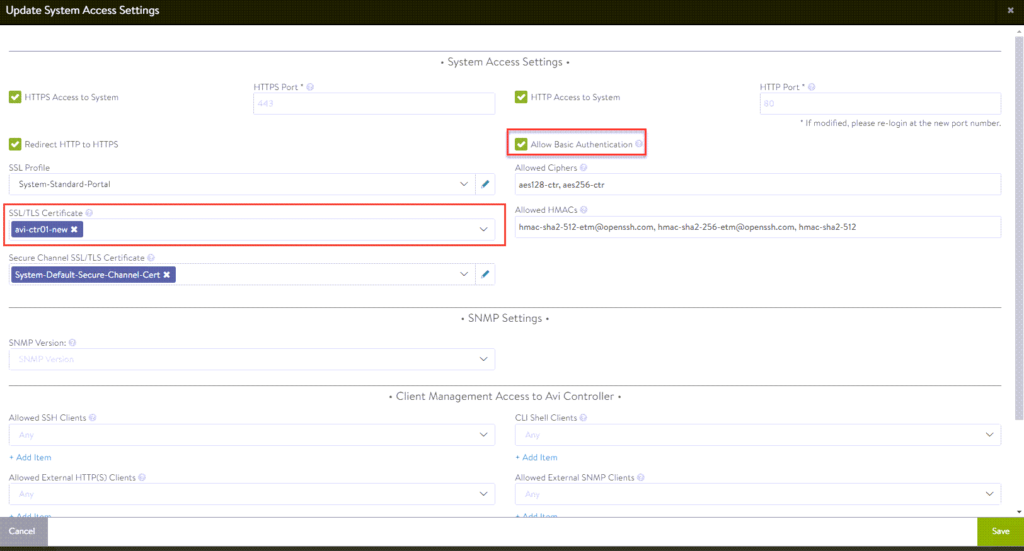

NSX-ALB Controller Certificate

NSX Application Platform Part 4: Deploying the Application Platform

- To configure a certificate, select Templates -> Security -> SSL/TLS Certificates -> Create -> Controller Certificate.

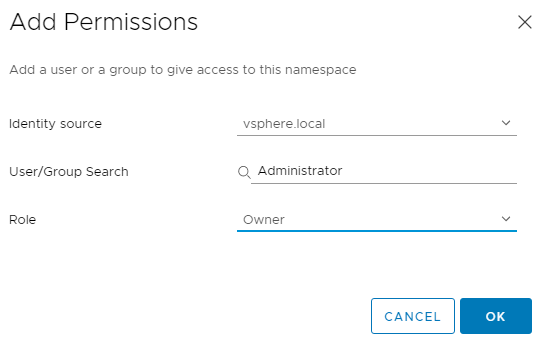

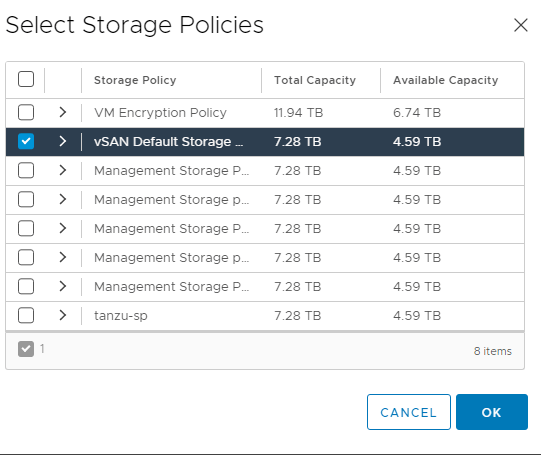

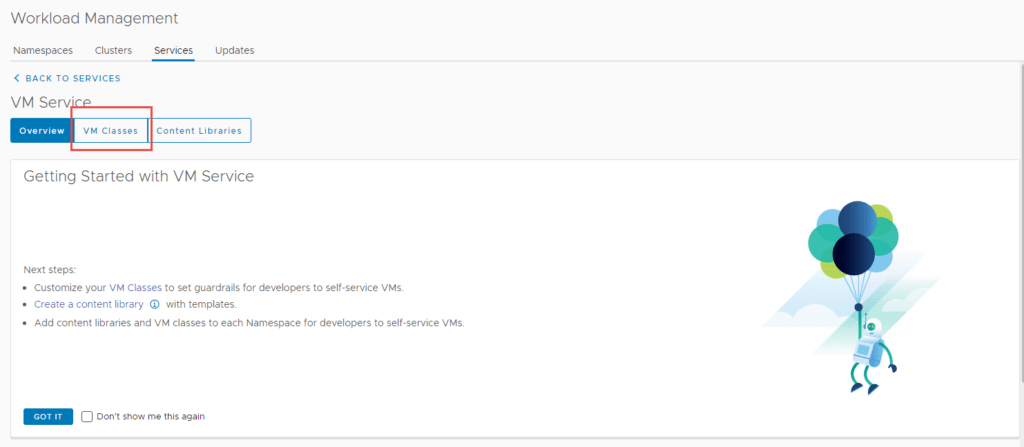

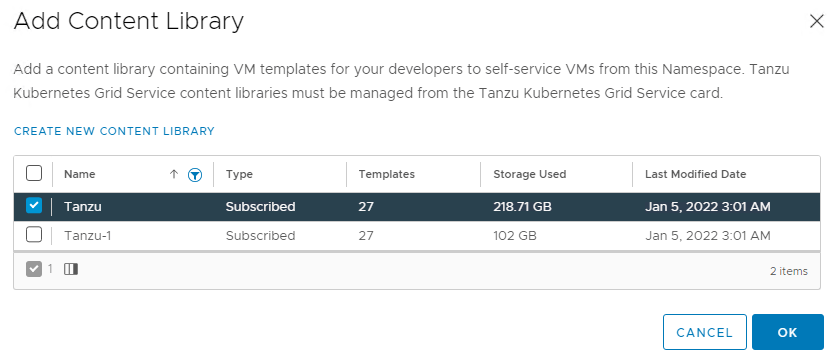

We will need to configure permissions, storage policies, VM classes, and a content library.

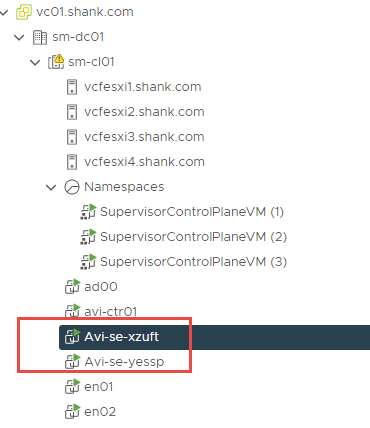

The cluster is deployed in vCenter.

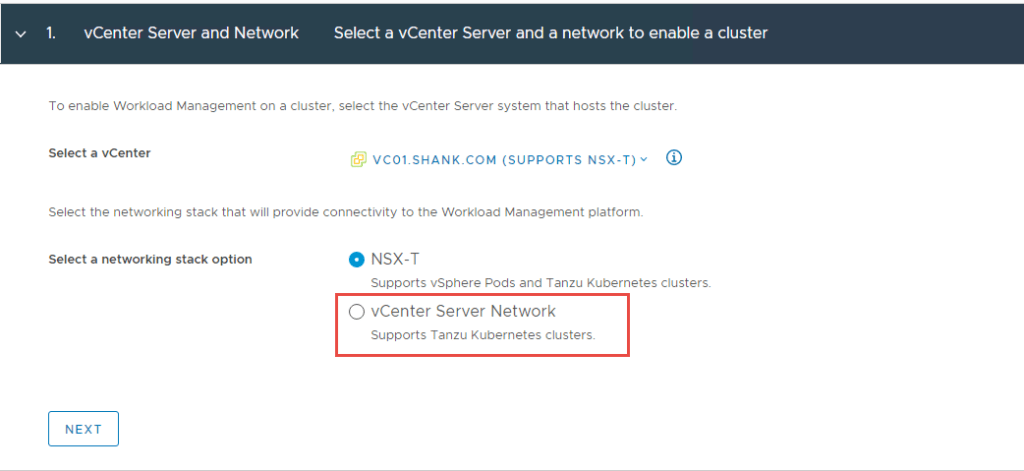

Note: If you have more than one vCenter in linked mode, ensure you have selected the right one.

Note: If you are running a cluster of NSX-ALB controllers, ensure you enter the FQDN and VIP for the cluster under SAN entries.

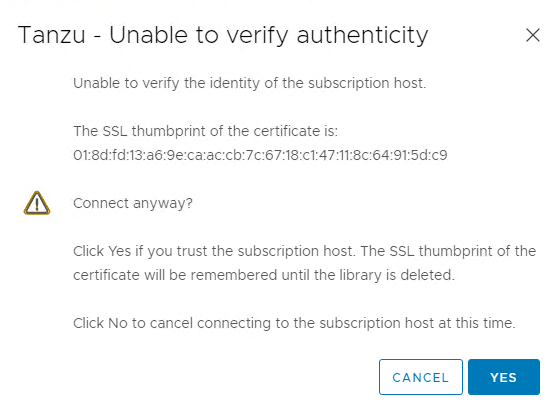

6. Click Next once complete. You will be prompted to accept the certificate; select Yes.

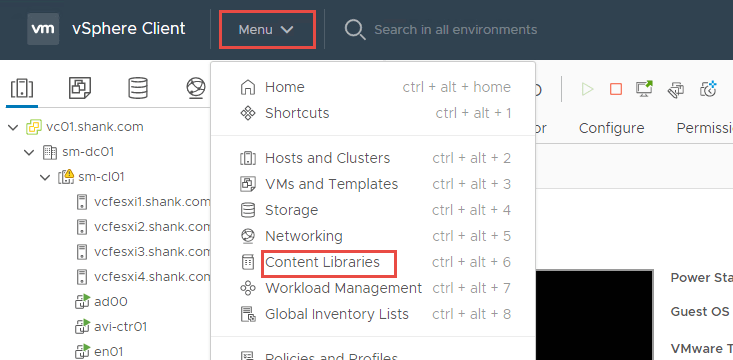

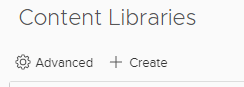

2. Click Create to define a new content library.

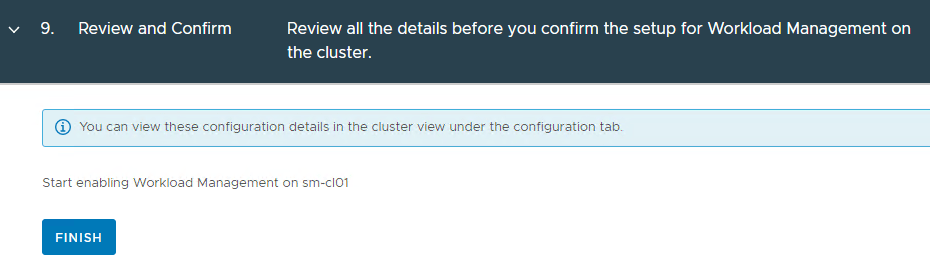

Note: This is your last chance to change any configuration. If you go past this point and need to change something that can’t be changed later, you will need to disable and re-enable TKGS.

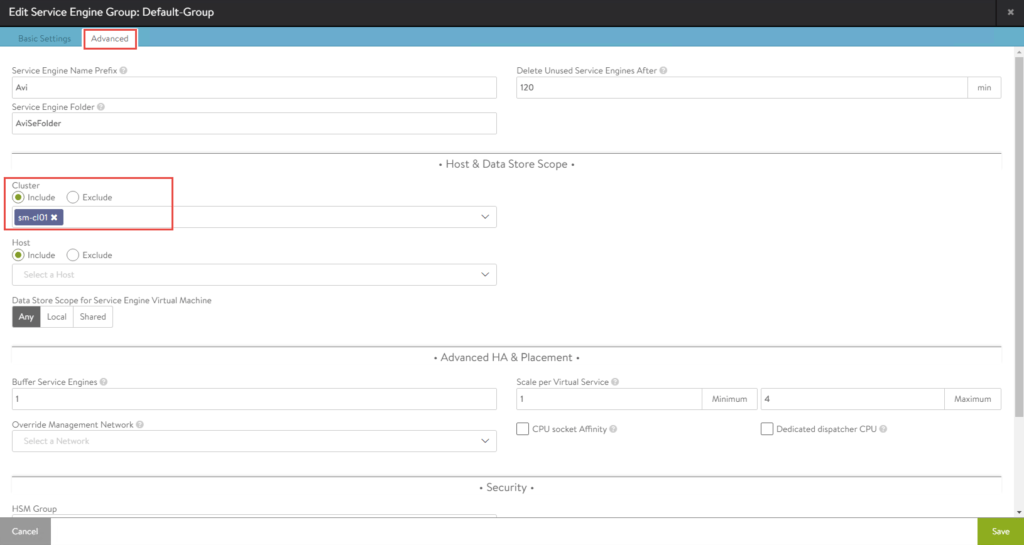

Service Engine Group Configuration

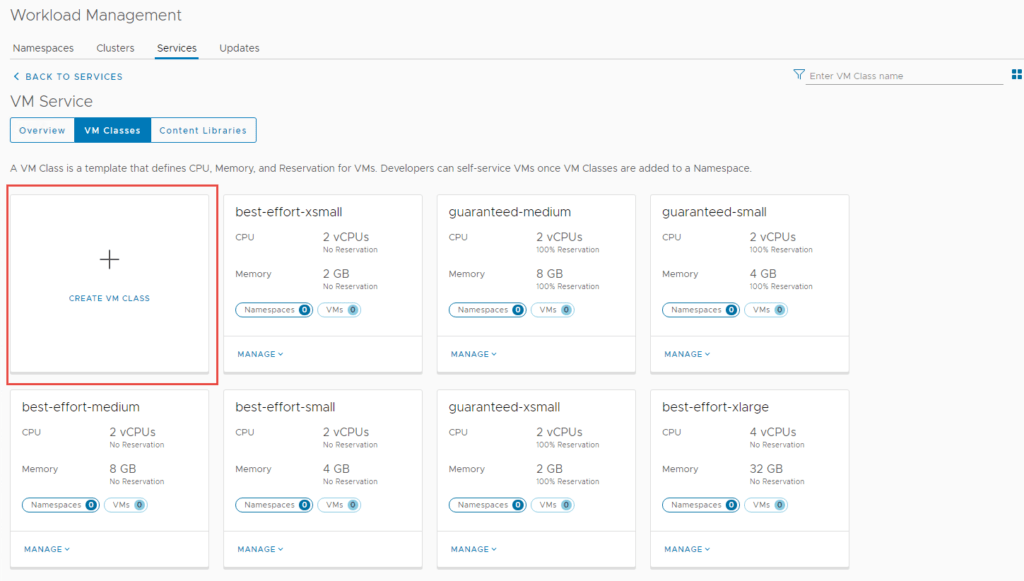

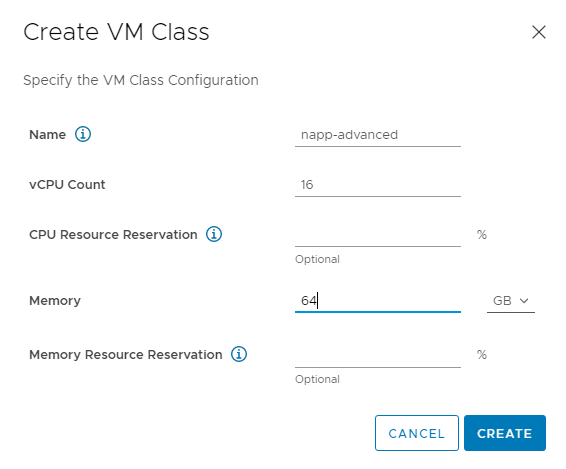

Depending on which mode of NAPP you want to deploy, you need to configure your VM classes accordingly. A table of the modes and their requirements can be found here. I will be deploying Advanced, so I will create a VM class to suit.

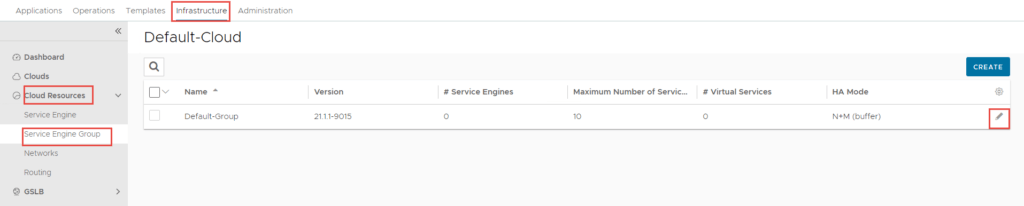

- To configure this, click on Infrastructure -> Cloud Resources -> Service Engine Group -> Edit Default-Group.

2. Select the Advanced tab, click Include and select the cluster.

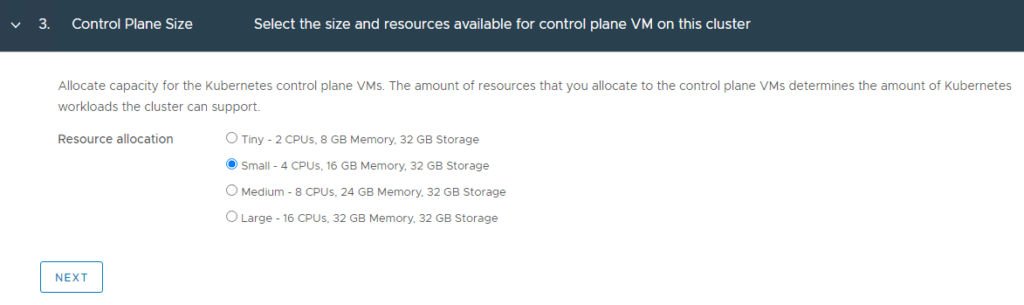

Note: I selected Small for my lab deployment. However, in production you might choose differently.

Configure the VIP Network

    root@jump:/mnt/tanzuFiles# kubectl get virtualmachine -n impactor
    NAME                                         POWERSTATE   AGE
    impactorlab-control-plane-k4nnd              poweredOn    12m
    impactorlab-workers-5srcx-7c776c7b4f-bts8m   poweredOn    8m29s
    impactorlab-workers-5srcx-7c776c7b4f-kjr6j   poweredOn    8m27s
    impactorlab-workers-5srcx-7c776c7b4f-v98z8   poweredOn    8m28s
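If you want to check this output from a script rather than by eye, the sketch below parses the three-column text and lists any VM that is not powered on. It assumes the plain `NAME POWERSTATE AGE` layout shown above; the function names are hypothetical, and `SAMPLE` is simply the output from my lab pasted in.

```python
SAMPLE = """\
NAME POWERSTATE AGE
impactorlab-control-plane-k4nnd poweredOn 12m
impactorlab-workers-5srcx-7c776c7b4f-bts8m poweredOn 8m29s
impactorlab-workers-5srcx-7c776c7b4f-kjr6j poweredOn 8m27s
impactorlab-workers-5srcx-7c776c7b4f-v98z8 poweredOn 8m28s
"""


def parse_virtual_machines(text):
    """Turn `kubectl get virtualmachine` text output into a list of dicts."""
    # Drop blank lines, then skip the header row.
    lines = [line for line in text.splitlines() if line.strip()]
    rows = []
    for line in lines[1:]:
        name, power_state, age = line.split()
        rows.append({"name": name, "power_state": power_state, "age": age})
    return rows


def not_powered_on(rows):
    """Return the names of VMs whose power state is anything other than poweredOn."""
    return [row["name"] for row in rows if row["power_state"] != "poweredOn"]
```

Feeding it the output above, `not_powered_on(parse_virtual_machines(SAMPLE))` gives an empty list, meaning the control plane node and all three workers are up.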