Introduction

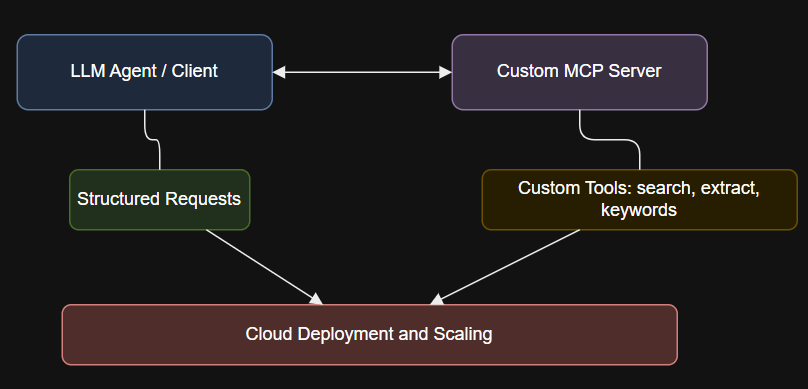

Traditional API integrations often require building a custom connection for every pairing of tool and AI agent, so the number of integrations grows multiplicatively. MCP simplifies this:

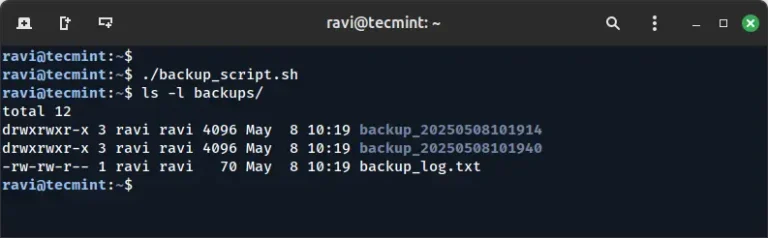

clarifai model upload

from fastmcp import FastMCP
from pydantic import Field
from typing import Annotated, Dict, Any, List
from serpapi import GoogleSearch
from newspaper import Article

Before MCP:

M models × N tools = many unique, hard-to-maintain connections.

clarifai model local-runner

After deployment, connect with FastMCP’s client to invoke your tools programmatically:

MCP Architecture: How It Works

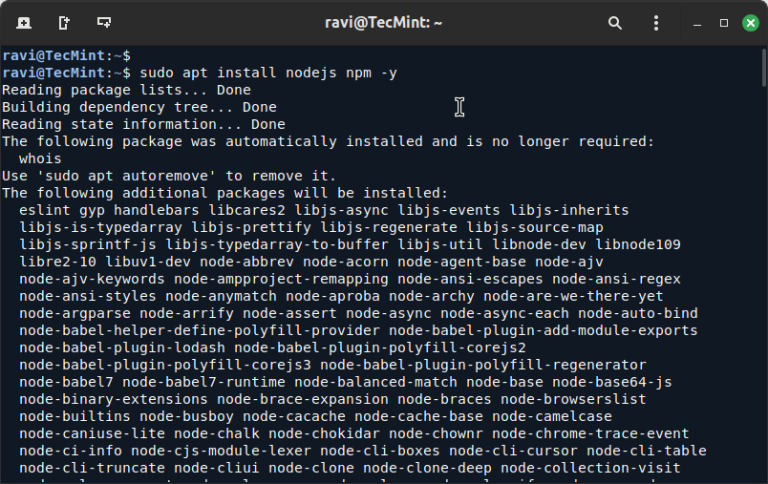

pip install fastmcp clarifai newspaper3k google-search-results pydantic

In this guide, you will learn how to build your own custom MCP server using FastMCP, test it locally, and deploy it to the Clarifai platform. By the end, you will be able to create a server that exposes specialized tools, ready to be used by LLMs and AI agents in production environments.

- Integrate with internal or proprietary APIs and systems

- Control the logic, validation, or data returned by their tools

- Optimize performance for specific workloads or compliance requirements

The Model Context Protocol (MCP) standardizes how AI systems interact with external tools, APIs, and data sources. It offers a single, unified communication interface; think of it as the “USB-C” for AI integrations. Instead of building and maintaining dozens of unique API bridges, developers can use MCP to connect multiple tools to any compatible AI agent with minimal effort. This reduces development and maintenance time and gives agents real-time, secure, and scalable access to complex workflows.
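To make the "single interface" idea concrete: MCP messages are JSON-RPC 2.0 requests, and tool discovery and invocation go through the `tools/list` and `tools/call` methods. The sketch below builds those two request payloads by hand. The helper `jsonrpc_request` is a hypothetical name used only for illustration (it is not part of any SDK), and the tool name and arguments are borrowed from the client example in this guide.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_ids = count(1)  # simple request-id generator for this sketch


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope (hypothetical helper, illustration only)."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name with keyword-style arguments.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "multi_engine_search", "arguments": {"query": "AI in medicine"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice a client library such as FastMCP builds and sends these envelopes for you; the point is only that every tool, whatever it does, is reached through the same two message shapes.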

Step-by-Step: Building Your Own MCP Server with FastMCP

1. Install All Required Dependencies

clarifai model test-locally --mode container

6. Upload and Deploy Your MCP Server

from fastmcp import Client
from fastmcp.client.transports import StreamableHttpTransport

A custom MCP server gives you complete flexibility, maintainability, and performance tuning tailored to your unique workflows.

Client: Any AI system or application (LLM agent, chatbot, workflow engine) that calls external functions.

Server: The MCP server, hosting callable tools (like “fetch sales data,” “run SQL query,” or “extract blog content”) and returning structured results.

With MCP, you define a tool once and any compliant AI agent can use it. This shared protocol streamlines both the development and scaling of AI-enabled tools.

@server.tool("extract_web_content_from_links",
             description="Extracts readable article content from a list of URLs.")
def extract_web_content_from_links(urls: List[str]) -> Dict[str, str]:
    extracted = {}
    for url in urls:
        try:
            # Download and parse the article with newspaper3k
            article = Article(url)
            article.download()
            article.parse()
            # Keep only the first 1,000 characters of the article text
            extracted[url] = article.text[:1000]
        except Exception as e:
            # Record the failure for this URL and continue with the rest
            extracted[url] = f"Error extracting: {str(e)}"
    return extracted

export CLARIFAI_PAT="YOUR_PERSONAL_ACCESS_TOKEN_HERE"
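The extraction tool above follows a pattern worth isolating: each URL is processed independently, the text is truncated to a fixed length, and a failure on one URL is recorded without aborting the rest. Below is a minimal sketch of that pattern with the downloader passed in as a function, so it can be exercised without network access. `extract_all` and `fake_fetch` are hypothetical names introduced for illustration; they are not part of newspaper3k or FastMCP.

```python
from typing import Callable, Dict, Iterable


def extract_all(
    urls: Iterable[str],
    fetch_text: Callable[[str], str],
    max_chars: int = 1000,
) -> Dict[str, str]:
    """Map each URL to truncated text, or to an error message if fetching fails."""
    extracted: Dict[str, str] = {}
    for url in urls:
        try:
            extracted[url] = fetch_text(url)[:max_chars]
        except Exception as exc:
            # One bad URL should not prevent the others from being processed.
            extracted[url] = f"Error extracting: {exc}"
    return extracted


def fake_fetch(url: str) -> str:
    """Stand-in for a real downloader; fails for one URL on purpose."""
    if "broken" in url:
        raise ValueError("download failed")
    return "x" * 1500


results = extract_all(
    ["https://example.com/a", "https://example.com/broken"],
    fake_fetch,
)
print({url: len(text) for url, text in results.items()})
```

Injecting the fetch function is a design choice for testability; in the server itself the body of `fetch_text` would be the `Article(url)` download-and-parse steps.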

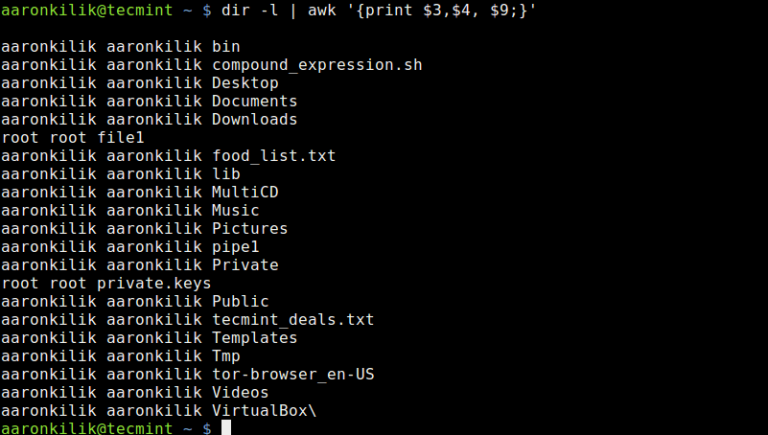

2. Project Structure

After MCP:

M models + N tools = scalable, protocol-driven integrations.
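The gap between M × N and M + N is easy to underestimate, so here is a small arithmetic sketch. The function names and the sample sizes are illustrative assumptions, and the count treats each model-to-tool bridge (or each protocol adapter) as one unit of integration work.

```python
def integrations_without_mcp(models: int, tools: int) -> int:
    """Every model needs its own bridge to every tool."""
    return models * tools


def integrations_with_mcp(models: int, tools: int) -> int:
    """Each model and each tool implements the shared protocol once."""
    return models + tools


for m, n in [(3, 5), (10, 20)]:
    before = integrations_without_mcp(m, n)
    after = integrations_with_mcp(m, n)
    print(f"{m} models, {n} tools: {before} custom bridges vs {after} protocol adapters")
```

With 10 models and 20 tools, that is 200 pairwise bridges against 30 protocol implementations.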

your_model_directory/
├── 1/
│   └── model.py
├── requirements.txt
└── config.yaml

- model.py contains your server logic and tool definitions.

- requirements.txt lists all Python packages.

- config.yaml holds deployment metadata for Clarifai.
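If you prefer to create this layout programmatically, the following sketch builds the same three files as empty placeholders. It writes into a temporary directory so it is safe to run as-is; the `scaffold` helper is a hypothetical convenience for illustration, not a Clarifai CLI feature.

```python
import tempfile
from pathlib import Path
from typing import List


def scaffold(root: Path) -> List[str]:
    """Create the empty project layout and return the files created (relative paths)."""
    (root / "1").mkdir(parents=True, exist_ok=True)
    for rel in ("1/model.py", "requirements.txt", "config.yaml"):
        (root / rel).touch()
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    created = scaffold(Path(tmp) / "your_model_directory")
    print(created)
```

To scaffold a real project, call `scaffold(Path("your_model_directory"))` instead of using the temporary directory.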

3. Implement the MCP Server and Tools

@server.tool("keyword_research",
             description="Performs keyword research and returns suggestions.")
def keyword_research(topic: str) -> List[Dict[str, Any]]:
    # Query SerpApi's Google Autocomplete engine for suggestions related to the topic
    autocomplete_params = {"api_key": SERPAPI_API_KEY, "engine": "google_autocomplete", "q": topic}
    search = GoogleSearch(autocomplete_params)
    # Keep the first five suggestion strings
    suggestions = [item['value'] for item in search.get_dict().get('suggestions', [])[:5]]
    return [{"keyword": k, "popularity": "N/A"} for k in suggestions]  # Add trend integration as needed
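The search tools in this guide reference `SERPAPI_API_KEY`, but its definition is not shown. One common approach, offered here as an assumption rather than the setup the guide uses, is to read the key from an environment variable and fail early with a clear message when it is missing. The helper name `load_api_key` and the demo variable `DEMO_API_KEY` are hypothetical.

```python
import os


def load_api_key(env_var: str = "SERPAPI_API_KEY") -> str:
    """Return the API key stored in env_var, or raise if it is unset or blank."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before starting the server.")
    return key


# Demonstration with a placeholder variable so the real key is never touched.
os.environ["DEMO_API_KEY"] = "placeholder-value"
demo_key = load_api_key("DEMO_API_KEY")
print(demo_key)
```

In `model.py` this would become `SERPAPI_API_KEY = load_api_key()` near the top of the file, which keeps the secret out of source control.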

https://api.clarifai.com/v2/ext/mcp/v1/users/YOUR_USER_ID/apps/YOUR_APP_ID/models/YOUR_MODEL_ID

By following this guide, you can develop, test, and deploy a custom MCP server tailored to your business or research needs. MCP’s protocol-first architecture lets your agents access a wide range of internal and external tools without a custom integration for every use case. Using frameworks like FastMCP, you can expose high-value functionality, stay compatible with leading AI models, and scale your deployments confidently.

Interacting with Your MCP Server

Off-the-shelf MCP servers exist for popular platforms like GitHub, Slack, or Notion. But many organizations need to:

@server.tool("multi_engine_search",
             description="Search and return top 5 article/blog links.")
def multi_engine_search(query: str, engine: str = "google", location: str = "United States", device: str = "desktop") -> List[str]:
    params = {"api_key": SERPAPI_API_KEY, "engine": engine, "q": query, "location": location, "device": device}
    search = GoogleSearch(params)
    results = search.get_dict()
    # Return the links of the first five organic results
    return [result.get("link") for result in results.get("organic_results", [])[:5]]

# `transport` is expected to be a StreamableHttpTransport pointing at your deployed MCP endpoint
async with Client(transport) as client:
    tools = await client.list_tools()
    result = await client.call_tool("multi_engine_search", {"query": "AI in medicine"})
    print(result)
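The client snippet above uses a `transport` object and a top-level `async with`, neither of which is defined or runnable on its own in a plain script. The sketch below is one hedged way to complete it. It assumes the Clarifai endpoint pattern shown in this guide, assumes the personal access token is sent as a Bearer token in the `Authorization` header, and uses hypothetical helper names (`mcp_endpoint`, `auth_headers`). The FastMCP imports are deferred into `main()` so the helpers can be used without the package installed.

```python
import asyncio
import os
from typing import Dict

BASE_URL = "https://api.clarifai.com/v2/ext/mcp/v1"


def mcp_endpoint(user_id: str, app_id: str, model_id: str) -> str:
    """Build the MCP endpoint URL for a deployed model."""
    return f"{BASE_URL}/users/{user_id}/apps/{app_id}/models/{model_id}"


def auth_headers(pat: str) -> Dict[str, str]:
    """Assumption: the PAT is passed as a Bearer token."""
    return {"Authorization": f"Bearer {pat}"}


async def main() -> None:
    # Imported here so the helpers above work even without fastmcp installed.
    from fastmcp import Client
    from fastmcp.client.transports import StreamableHttpTransport

    transport = StreamableHttpTransport(
        url=mcp_endpoint("YOUR_USER_ID", "YOUR_APP_ID", "YOUR_MODEL_ID"),
        headers=auth_headers(os.environ["CLARIFAI_PAT"]),
    )
    async with Client(transport) as client:
        tools = await client.list_tools()
        print([tool.name for tool in tools])
        result = await client.call_tool("multi_engine_search", {"query": "AI in medicine"})
        print(result)


# Uncomment to run against a deployed server (requires CLARIFAI_PAT to be set):
# asyncio.run(main())
```

Replace the three placeholder IDs with your own values before running; the call will fail if the model has not been uploaded and deployed.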

MCP in the Real World

Conclusion

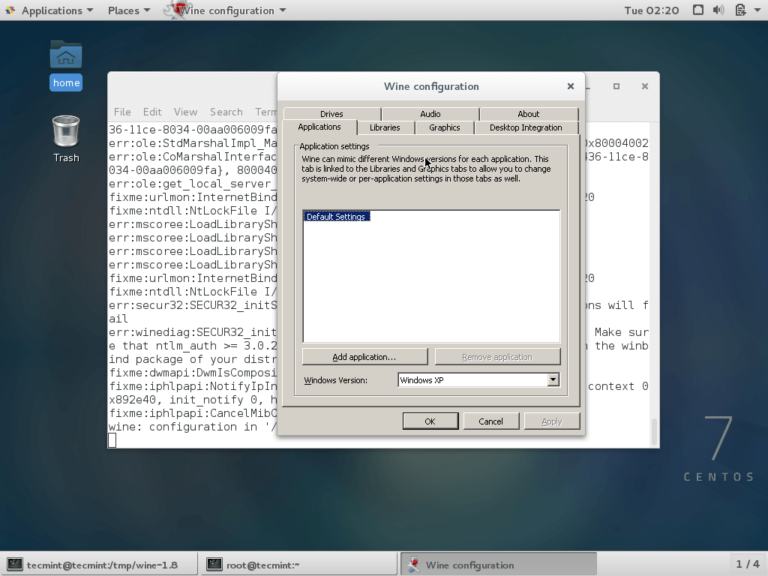

build_info:
  python_version: '3.12'
inference_compute_info:
  cpu_limit: '1'
  cpu_memory: 1Gi
  num_accelerators: 0
model:
  app_id: YOUR_APP_ID
  id: YOUR_MODEL_ID
  model_type_id: mcp
  user_id: YOUR_USER_ID

requirements.txt Example:

clarifai
fastmcp
mcp
requests
lxml
google-search-results
newspaper3k
anyio

5. Test Your MCP Server Locally

You can use the Clarifai CLI to simulate and debug your server: