Four Advanced Feature Scaling Methods in Python

This article explores four advanced feature scaling techniques, explaining each concept and providing hands-on Python examples for immediate application.

Why Advanced Feature Scaling Matters

Basic scaling methods such as min-max scaling and standardization work best on well-behaved, roughly normally distributed data. When the data contains outliers, heavy skew, or multimodal structure, these approaches may fail to produce useful results. Advanced scaling methods are designed to handle these cases, making your features better suited to modern algorithms and analytics.

We will cover the following techniques, with step-by-step Python code for each:

- Quantile Transformation
- Power Transformation
- Robust Scaling
- Unit Vector Scaling
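To make the motivation concrete, here is a minimal sketch of how a single outlier affects the two basic methods. It uses plain NumPy and the sample values that appear in the robust scaling output in this article; the variable names are illustrative only.

```python
import numpy as np

# Sample values borrowed from the robust scaling output in this article.
X = np.array([10.0, 20.0, 30.0, 40.0, 1000.0])

# Min-max scaling: map the observed range onto [0, 1].
X_minmax = (X - X.min()) / (X.max() - X.min())

# Standardization: subtract the mean and divide by the standard deviation.
X_standard = (X - X.mean()) / X.std()

np.set_printoptions(precision=3, suppress=True)
print("Min-max scaled:\n", X_minmax)
print("Standardized:\n", X_standard)
```

In both results the four ordinary values end up within a small fraction of a unit of each other, because the single value of 1000 dominates the range, the mean, and the standard deviation.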

1. Quantile Transformation

Example: Mapping to a normal distribution

print("Original Data:\n", X.ravel())

print("Quantile Transformed (Normal):\n", X_trans.ravel())
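The two print statements for this example rely on `X` and `X_trans` being defined elsewhere. A self-contained sketch might look like the following, assuming scikit-learn's `QuantileTransformer` is the intended tool. The synthetic exponential data, the random seed, and `n_quantiles=100` are assumptions chosen for illustration, not values from the original example.

```python
import numpy as np
from sklearn.preprocessing import QuantileTransformer

# Hypothetical right-skewed data: 1000 draws from an exponential distribution.
rng = np.random.default_rng(0)
X = rng.exponential(scale=2.0, size=(1000, 1))

# Map the empirical quantiles of X onto those of a standard normal distribution.
qt = QuantileTransformer(n_quantiles=100, output_distribution="normal", random_state=0)
X_trans = qt.fit_transform(X)

print("Original Data (first 5):\n", X[:5].ravel())
print("Quantile Transformed (Normal, first 5):\n", X_trans[:5].ravel())
print("Median after transform:", np.median(X_trans))
```

Because the mapping is based on ranks, it preserves the ordering of the values while reshaping the distribution, which is why it copes well with heavy skew.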

2. Power Transformation

Example: Box-Cox on positive data

print("Original Data:\n", X.ravel())

print("Power Transformed (Box-Cox):\n", X_trans.ravel())

Output:

Original Data:

[1. 2. 3. 4. 5.]

Power Transformed (Box-Cox):

[-1.50 -0.64 0.08 0.73 1.34]
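For reference, a complete version of the Box-Cox example could be sketched as follows. It assumes scikit-learn's `PowerTransformer` with `method="box-cox"`, which requires strictly positive inputs, and takes its input values from the output listing above. The extra line printing the fitted lambda is an addition for illustration.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer

# Strictly positive input, taken from the "Original Data" listing above.
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])

# Box-Cox estimates a power parameter (lambda) by maximum likelihood,
# then standardizes the result to zero mean and unit variance.
pt = PowerTransformer(method="box-cox", standardize=True)
X_trans = pt.fit_transform(X)

print("Original Data:\n", X.ravel())
print("Power Transformed (Box-Cox):\n", np.round(X_trans.ravel(), 2))
print("Estimated lambda:", pt.lambdas_[0])
```

With `standardize=True` the transformed values have zero mean and unit variance, which is consistent with the figures in the listing above. For data containing zeros or negative values, `method="yeo-johnson"` is the usual alternative.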

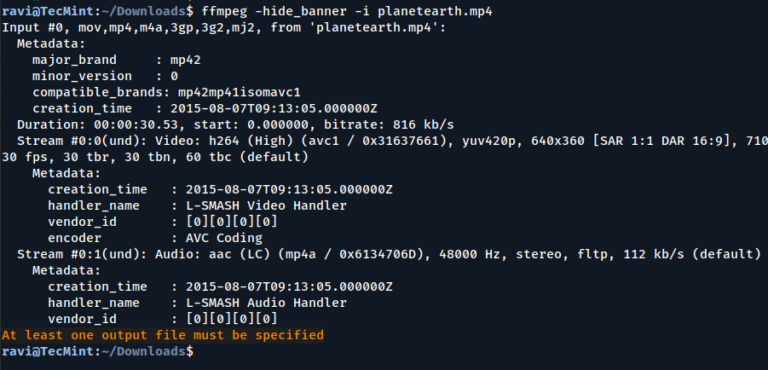

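3. Robust Scaling

print("Original Data:\n", X.ravel())

print("Robust Scaled:\n", X_trans.ravel())

Output:

Original Data: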

[ 10 20 30 40 1000]

Robust Scaled:

[-1. -0.5 0. 0.5 48.5]

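The outlier still maps to a large value (48.5), but because the median and interquartile range barely react to it, the remaining values keep a usable spread between -1 and 0.5, making the scaled feature more reliable for modeling.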
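The robust scaling result can be reproduced with a short, self-contained sketch. It assumes scikit-learn's `RobustScaler` with its default settings, which center on the median and divide by the interquartile range (25th to 75th percentile). The input values come from the output listing above.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Input taken from the "Original Data" listing above; 1000 is the outlier.
X = np.array([[10], [20], [30], [40], [1000]])

# Defaults: subtract the median (30) and divide by the IQR (40 - 20 = 20).
scaler = RobustScaler()
X_trans = scaler.fit_transform(X)

print("Original Data:\n", X.ravel())
print("Robust Scaled:\n", X_trans.ravel())
print("Median:", scaler.center_[0], "IQR:", scaler.scale_[0])
```

For example, the outlier is scaled as (1000 - 30) / 20 = 48.5, while the value 10 becomes (10 - 30) / 20 = -1.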