Despite the revolutionary agentic delivery mechanism used to achieve groundbreaking speed and scale in this attack, the underlying techniques and tools used were surprisingly conventional. The adversary didn’t develop exotic zero-day exploits; in fact, it was the opposite. Anthropic notes:

“The cybersecurity community needs to assume a fundamental change has occurred: Security teams should experiment with applying AI for defense in areas like SOC automation, threat detection, vulnerability assessment, and incident response and build experience with what works in their specific environments.”1

While AI-powered defenses are essential for countering AI-powered adversaries, defenders must also recognize that enterprise AI systems represent vulnerable targets. As employees embrace AI tools for productivity and organizations rapidly deploy their own AI software and infrastructure, they’re creating new attack surfaces that require dedicated security controls including:

Adversaries Are Embracing the AI Era

This astounding figure should be an industry wakeup call. This isn’t incremental improvement; it’s exponential transformation. Defenders relying on human-speed responses in this new AI era will find themselves outpaced and outmaneuvered.

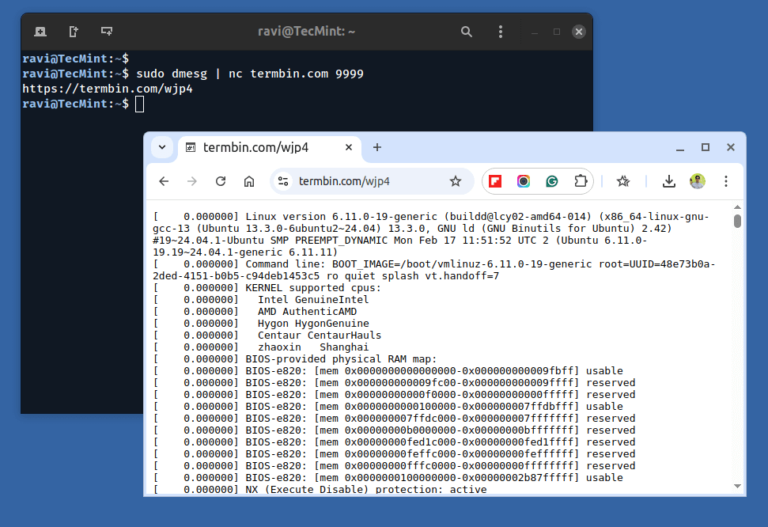

“The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents, with the threat actor able to leverage AI to execute 80-90% of tactical operations independently at physically impossible request rates.”

We are witnessing a transformative moment in cybersecurity. Threat actors have historically needed to manually trigger and validate each phase of an attack, including running reconnaissance scans, identifying vulnerabilities, crafting exploits, executing intrusions, and triggering data exfiltrations. This constraint limited the speed and scale of attacks. Agentic AI, now used adversarially, has shattered these limitations:

A Novel Attack Tempo With Familiar Techniques

1. Anthropic. Disrupting the First Reported AI-Orchestrated Cyber Espionage Campaign. Anthropic, Nov. 2025: https://assets.anthropic.com/m/ec212e6566a0d47/original/Disrupting-the-first-reported-AI-orchestrated-cyber-espionage-campaign.pd.

“The operational infrastructure relied overwhelmingly on open source penetration testing tools rather than custom malware development. Standard security utilities including network scanners, database exploitation frameworks, password crackers, and binary analysis suites comprised the core technical toolkit.”

CrowdStrike Charlotte Agentic SOAR, for example, is enhanced with AI-powered decision-making and can automatically execute response playbooks the moment threats are detected. When an AI agent attempts lateral movement, CrowdStrike can automatically isolate the affected endpoint, terminate malicious processes, and contain the threat before the adversary’s automation can pivot to the next target.

Words Are Weapons: The Prompt Injection Threat

Anthropic’s Threat Intelligence team recently uncovered and disrupted a sophisticated nation-state operation that weaponized Claude’s agentic capabilities and the Model Context Protocol (MCP) to orchestrate automated cyberattacks simultaneously against multiple targets worldwide. This AI-powered attack automated reconnaissance, vulnerability exploitation, lateral movement, and more across multiple victim environments at unprecedented scale and speed. We commend Anthropic for their swift response, their transparency in sharing detailed findings with the community, and for issuing a critical call to action to defenders worldwide:

The Falcon platform provides comprehensive protection for securing workforce use of AI and enterprise AI deployments with AI Detection and Response. This is a watershed moment in cybersecurity and the path forward is clear: Fight fire with fire. Security teams that embrace AI-powered defenses and work diligently to secure the use and deployment of AI within their own organizations will be well positioned to face this brave new world.

One of the most notable aspects of this attack is how the adversary initially gained Claude’s cooperation. They didn’t exploit a software vulnerability or bypass authentication controls, but instead used prompt injection to circumvent model guardrails. As Anthropic explains: “The key was role-play: the human operators claimed that they were employees of legitimate cybersecurity firms and convinced Claude that it was being used in defensive cybersecurity testing.”

This is both reassuring and alarming. Reassuring, because existing detection and response strategies and tools still have significant relevance and value here. Network scanners, for example, will still generate recognizable traffic patterns even if they are triggered by an agent. The TTPs that SOC teams have spent years learning to detect haven’t become obsolete overnight. However, traditional defensive technologies and strategies will only have relevance in this fight if they’re augmented with machine automation that can match and counter an adversary’s speed with AI-powered alert validation, triage, orchestration, and response.

The vision of the agentic SOC isn’t about replacing security analysts, it’s about augmenting them with automation capabilities that match those used by adversaries. CrowdStrike customers are already leveraging the CrowdStrike Falcon® platform’s AI-powered capabilities to fight fire with fire. Our CrowdStrike® Charlotte AI™ security analyst operates as a force multiplier for SOC teams by providing autonomous threat detection, investigation, and response capabilities that operate at the speed and scale required to counter AI-powered adversaries.

Enterprises building and deploying their own AI systems must also recognize that these systems can be manipulated and weaponized through prompt injection. Traditional security controls like firewalls, antivirus, and access controls don’t protect against an adversary who can successfully persuade an AI system to return information or perform an action against its intended design and constraints.

Match Adversary Capability with the Agentic SOC

The attack surface has expanded to the semantic layer. We’ve spent decades defending endpoints, applications, networks, identities, and cloud environments; now, it’s time to defend sentences.

This demands a new category of security controls specifically designed for AI systems: prompt injection detection, context validation, output filtering, and behavioral monitoring of AI interactions. Enterprises must implement guardrails that verify the legitimacy of requests, validate that AI actions align with authorized use cases, and detect when AI systems are being manipulated to perform unauthorized activities.

CrowdStrike maintains the industry’s most comprehensive taxonomy of prompt injections through its acquisition of Pangea, tracking more than 150 different prompt injection techniques.

Enterprise AI Systems: The New Critical Infrastructure

The message is clear: To defeat AI-powered adversaries, we must fight fire with fire.

- Prompt Injection Defenses: Implement input validation and sanitization for all prompts sent to AI systems. Deploy prompt injection detection that identifies attempts to manipulate AI behavior through techniques like role-playing. Establish clear boundaries for what AI systems are authorized to do and implement technical controls that prevent unauthorized actions regardless of how convincingly they’re requested.

- Context Validation and Authorization: Verify that AI interactions occur within legitimate business contexts. Implement authentication and authorization controls that validate the identity of users interacting with AI systems and ensure their requests align with their authorized roles and responsibilities.

- Input and Output Filtering and Monitoring: Monitor AI system inputs and outputs for sensitive data exposure, malicious content, or other harmful content. Implement filtering mechanisms that prevent AI systems from generating dangerous outputs even if prompted to do so. Log all AI interactions for security monitoring and forensic analysis.

- Secure AI Development Lifecycle: Integrate security into AI system development from the beginning. Conduct threat modeling for AI applications, perform security testing including adversarial testing of AI systems, instrument runtime guardrails, and maintain an inventory of all AI systems deployed across your environment.

Prompt injection, whereby adversaries use prompt instructions to cause malicious or unwanted model behavior, is the number one risk in the OWASP Top 10 Risks for LLM Applications as it represents a potential front door into enterprise AI systems that demands vigorous defense.

Additional Resources

Customers using Charlotte AI and the Falcon platform’s AI-powered capabilities report dramatic improvements in mean time to detect and mean time to respond, often reducing investigation times from hours to minutes and enabling automated response to threats that would previously require manual analyst intervention.