Introduction

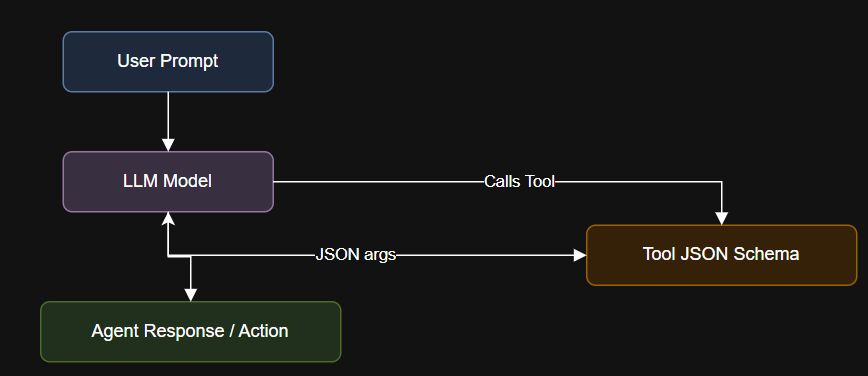

Function calling is the bridge between static LLMs and real-time agents. Frameworks such as LangChain and CrewAI now natively support the function-calling schema OpenAI introduced with its 0613 models, which makes it easier than ever to plug live business logic into AI pipelines.

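```python
# Function schema in OpenAI's 0613 format (sent as the `functions` request parameter)
tools = [
    {
        "name": "get_weather",
        "description": "Returns weather for a given city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
            },
            "required": ["city"],
        },
    }
]


def get_weather(city: str) -> str:
    # Stub: returns a fixed string instead of calling a real weather API
    return f"Weather in {city} is 72°F and sunny."
```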
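The list above follows the legacy 0613 format, which was sent as the `functions` request parameter. Current Chat Completions requests use a `tools` parameter instead, where each definition is wrapped in a `{"type": "function", "function": ...}` envelope. A minimal sketch of that conversion, assuming flat dict schemas like the `get_weather` entry above; the helper `to_tools_format` is hypothetical:

```python
from typing import Any


def to_tools_format(functions: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Wrap legacy 0613-style function schemas in the newer `tools` envelope."""
    return [{"type": "function", "function": fn} for fn in functions]


legacy_functions = [
    {
        "name": "get_weather",
        "description": "Returns weather for a given city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]

tools_payload = to_tools_format(legacy_functions)
print(tools_payload[0]["type"], tools_payload[0]["function"]["name"])
```

Keeping one source schema and converting it at the edge lets the same definition serve both request formats.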

What Is Function Calling in LLMs?

Function calling lets an LLM return a structured request (a function name plus JSON-encoded arguments) instead of plain text. The model never executes the function itself: your code runs it, so downstream systems can act on the result.

Guide on OpenAI JSON Output: https://www.rohan-paul.com/p/openai-functions-vs-langchain-agents
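Acting on a structured call usually comes down to a small dispatch step. A minimal sketch, assuming the 0613-style `function_call` dict (a `name` plus a JSON-encoded `arguments` string) and a hypothetical `FUNCTION_REGISTRY` that maps function names to local Python functions:

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    # Stub: a real tool would call a weather API here
    return f"Weather in {city} is 72°F and sunny."


# Hypothetical registry of the functions the model is allowed to call
FUNCTION_REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(function_call: dict[str, str]) -> Any:
    """Look up the requested function and call it with the decoded arguments."""
    name = function_call["name"]
    if name not in FUNCTION_REGISTRY:
        raise ValueError(f"Model requested unknown function: {name}")
    # `arguments` arrives as a JSON string, not a dict, so decode it first
    kwargs = json.loads(function_call["arguments"])
    return FUNCTION_REGISTRY[name](**kwargs)


result = dispatch({"name": "get_weather", "arguments": '{"city": "Paris"}'})
print(result)  # Weather in Paris is 72°F and sunny.
```

Refusing names that are not in the registry keeps the model from reaching code you never meant to expose.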

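Implementation

But what does “production-grade” really mean when it comes to LLM toolchains?

Let’s break down the actual syntax, versioning, and interfaces available in today’s top LLM libraries, using LangChain v0.3.27, OpenAI’s 0613 function schema, and real examples from enterprise deployments.

Output Format

Under the 0613 schema, the model’s reply carries a `function_call` object. Its `arguments` field is a JSON-encoded string rather than a nested object, so your code has to decode it before calling the function:

```json
{
  "function_call": {
    "name": "get_weather",
    "arguments": "{\"city\": \"Paris\"}"
  }
}
```

LangChain + OpenAI

LangChain Function Calling Docs: https://python.langchain.com/docs/how_to/function_calling/

In LangChain v0.3, `create_openai_functions_agent` takes a chat model, LangChain tool objects, and a prompt, and the resulting agent runs inside an `AgentExecutor`:

```python
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.tools import StructuredTool
from langchain_openai import ChatOpenAI

# The agent needs a LangChain tool object, so wrap get_weather (defined above)
weather_tool = StructuredTool.from_function(get_weather, description="Returns weather for a given city.")

# gpt-4-0613 is a chat model, so use ChatOpenAI rather than the completion-style OpenAI class
llm = ChatOpenAI(model="gpt-4-0613")

# The prompt must expose an agent_scratchpad slot for intermediate function calls
prompt = ChatPromptTemplate.from_messages([("human", "{input}"), MessagesPlaceholder("agent_scratchpad")])

agent = create_openai_functions_agent(llm, [weather_tool], prompt)
executor = AgentExecutor(agent=agent, tools=[weather_tool])  # runs the call/execute loop

response = executor.invoke({"input": "What's the weather in Paris?"})
print(response["output"])
```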
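LangChain’s agent loop handles the second half of the exchange for you: once a function has run, its result goes back to the model so it can compose the final answer. A minimal sketch of that bookkeeping in the legacy 0613 message format, where the result travels in a message whose `role` is `"function"`; the helper `build_follow_up_messages` is hypothetical, and nothing here calls the API:

```python
import json
from typing import Any


def build_follow_up_messages(
    history: list[dict[str, Any]],
    function_call: dict[str, str],
    result: str,
) -> list[dict[str, Any]]:
    """Append the model's function call and the function's result to the history."""
    return history + [
        # Echo the assistant turn that requested the call (no text content)
        {"role": "assistant", "content": None, "function_call": function_call},
        # Report the result under the same function name
        {"role": "function", "name": function_call["name"], "content": result},
    ]


history = [{"role": "user", "content": "What's the weather in Paris?"}]
call = {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})}
messages = build_follow_up_messages(history, call, "Weather in Paris is 72°F and sunny.")
print([m["role"] for m in messages])  # ['user', 'assistant', 'function']
```

The resulting list would be sent back in the next request so the model can answer in natural language.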

Key Design Questions

- Are you validating arguments passed to your tools?

- Should your tools call external services (e.g., weather APIs) or stay offline?

- What should happen if the model calls a function incorrectly?

- Are you distinguishing function calling from newer structured output modes in OpenAI?
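The first and third questions often share one answer: validate the decoded arguments against the function’s schema before executing anything, and hand a readable error back to the model instead of crashing. A minimal sketch, assuming only the `required` list and simple JSON Schema `type` names need checking (a dedicated JSON Schema validator would cover the full specification); `validate_arguments` and `safe_call` are hypothetical helpers:

```python
import json
from typing import Any, Callable

# Simple JSON Schema type names mapped to Python types (nested schemas are not checked)
JSON_TYPES: dict[str, Any] = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate_arguments(raw: str, schema: dict[str, Any]) -> dict[str, Any]:
    """Decode a JSON argument string and check it against a flat object schema."""
    args = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, value in args.items():
        if key not in properties:
            # Stricter than JSON Schema's default, which allows extra keys
            raise ValueError(f"unexpected argument: {key}")
        type_name = properties[key].get("type", "")
        expected = JSON_TYPES.get(type_name)
        # bool is a subclass of int in Python, so reject it for numeric types explicitly
        wrong_bool = isinstance(value, bool) and type_name in ("number", "integer")
        if expected is not None and (wrong_bool or not isinstance(value, expected)):
            raise ValueError(f"argument {key} should be of type {type_name}")
    return args


def safe_call(fn: Callable[..., str], raw: str, schema: dict[str, Any]) -> str:
    """Run fn with validated arguments, or return an error string the model can react to."""
    try:
        return fn(**validate_arguments(raw, schema))
    except ValueError as exc:
        return f"Error: {exc}"


def get_weather(city: str) -> str:
    return f"Weather in {city} is 72°F and sunny."


schema = {"type": "object", "properties": {"city": {"type": "string"}}, "required": ["city"]}
print(safe_call(get_weather, '{"city": "Paris"}', schema))  # Weather in Paris is 72°F and sunny.
print(safe_call(get_weather, '{"town": "Paris"}', schema))  # Error: missing required argument: city
```

Returning the error as text, rather than raising, gives the model a chance to correct its call on the next turn.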

Function Calling Flow Diagram

LangChain vs OpenAI Native SDK

| Feature | LangChain | OpenAI Native SDK |
|---|---|---|
| Tool registration | Python dict / schema-based | Direct function schema (JSON) |
| Response handling | Auto JSON parsing in agent loop | Manual parsing and function call |
| Multi-tool invocation | Supported with call routing | Parallel `tool_calls` in the newer tools API; the legacy 0613 `function_call` allows one call per turn |
| Built-in retry logic | Agent-level error handling (e.g. `handle_parsing_errors`) and `.with_retry()` on runnables | Custom implementation needed (the client’s `max_retries` only covers transient API errors) |
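In the newer tools interface (unlike the legacy 0613 `function_call` field, which carries a single call), one assistant message can list several entries in `tool_calls`, each with its own `id`, and every result goes back in a message with `role` set to `"tool"` that echoes that `tool_call_id`. A minimal offline sketch of the routing step, using hand-written dicts shaped like that response; `handle_tool_calls`, `get_time`, and the call IDs are hypothetical:

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    return f"Weather in {city} is 72°F and sunny."


def get_time(city: str) -> str:
    # Hypothetical second tool with a fixed answer
    return f"It is 09:00 in {city}."


TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather, "get_time": get_time}


def handle_tool_calls(tool_calls: list[dict[str, Any]]) -> list[dict[str, str]]:
    """Run every requested tool and build one `tool` message per call."""
    replies = []
    for call in tool_calls:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return replies


# Offline stand-in for the tool_calls list of an assistant message (IDs are made up)
tool_calls = [
    {"id": "call_1", "type": "function", "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function", "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
]
for reply in handle_tool_calls(tool_calls):
    print(reply["tool_call_id"], reply["content"])
```

Matching each reply to its `tool_call_id` is what lets the model pair results with requests when several calls arrive at once.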

External Resources

According to OpenAI’s documentation:

“Tool calling allows a model to intelligently decide to call functions you’ve defined. These functions return arguments in a structured JSON format.”

OpenAI Function Calling: https://platform.openai.com/docs/guides/function-calling

Additional Considerations

- CrewAI Versioning: CrewAI supports OpenAI-style function calls as of version 0.8.0, often in tandem with LiteLLM. Earlier versions may not fully support multi-agent invocation pipelines.

- Structured Outputs: OpenAI’s newer Structured Outputs mode (enabled with `"strict": true`) ensures the model’s output matches your JSON Schema, whereas basic tool calling follows the schema only on a best-effort basis. It can be applied to function definitions as well as to response formats, so compare both for your use case.

- Security Notice: Treat model-generated arguments as untrusted input. When implementing dynamic code execution or real-time service calls, ensure proper sandboxing and validation to prevent injection vulnerabilities.
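To opt a function definition into Structured Outputs, OpenAI’s documentation describes setting `"strict": true` on it, which in turn requires `"additionalProperties": false` on the parameters object and every property listed as required. A minimal sketch that derives such a definition from the earlier `get_weather` schema; the helper `make_strict` is hypothetical and only handles a flat object of properties:

```python
import copy
from typing import Any


def make_strict(function_def: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of a flat function schema adjusted for strict mode."""
    strict_def = copy.deepcopy(function_def)  # leave the original definition untouched
    params = strict_def["parameters"]
    # Strict mode requires every property to be listed as required...
    params["required"] = list(params.get("properties", {}))
    # ...and forbids properties the schema does not declare
    params["additionalProperties"] = False
    strict_def["strict"] = True
    return strict_def


get_weather_def = {
    "name": "get_weather",
    "description": "Returns weather for a given city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# Wrap in the newer `tools` envelope used by current requests
tool = {"type": "function", "function": make_strict(get_weather_def)}
print(tool["function"]["strict"], tool["function"]["parameters"]["additionalProperties"])
```

Because strict mode makes every field required, optional inputs have to be modeled differently (for example as nullable types) rather than simply left out of `required`.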

Summary

Are you building AI agents that interact with APIs, run functions, or query real-world services? Then you’re already entering the world of LLM-based function calling.

In the next article, we’ll move from function calling into building intelligent agents, covering task planning, memory, and real-world decision-making models.